What if I told you 90% of machine learning projects that start with reinforcement learning never make it past a prototype? Meanwhile, most successful AI products you use daily — from spam filters to credit scoring — run on simple supervised learning.

That’s the gap we’re closing today.

This post isn’t another theory dump. You’ll walk away with a decision framework that tells you exactly when supervised learning is enough and when reinforcement learning is actually worth the extra cost and complexity. No fluff, no academic jargon — just a checklist you can use in a real product meeting.

Here’s my story: when I first learned RL, I thought it was the magic key to “true intelligence.” I spent weeks trying to simulate environments, only to realize my project didn’t need RL at all. A simple supervised model solved the business problem faster and cheaper. That lesson cost me time but taught me how to separate hype from ROI.

This blog will help you avoid that same mistake.

By the end, you’ll know:

- The core differences that matter in practice.

- A step-by-step decision framework you can reuse.

- Real-world case studies showing when each approach wins.

Here’s the roadmap:

- What these two paradigms really are (quick, precise definitions)

- Core technical differences that matter for product teams

- Hybrid and Middle-Ground Patterns (Practical Hacks Businesses Actually Use)

- Common pitfalls and hidden costs

- Real-world vignettes (short, business-focused case studies)

- FAQ — Quick Answers for Busy Product & ML Teams

- Conclusion

What these two paradigms really are (quick, precise definitions)

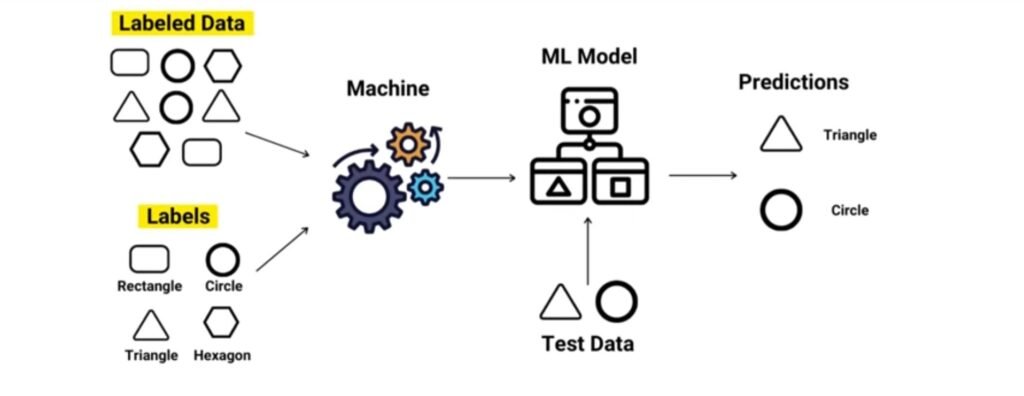

Supervised learning in one line

Supervised learning is about learning from examples.

You feed the model labeled input-output pairs, and it learns to map one to the other.

Think of it as “look, this is X, predict Y.”

It’s the engine behind spam filters, fraud detection, and image recognition.

Over 70% of production ML systems in business today rely on supervised methods because they’re cheaper, faster, and more predictable.

My first project at university was a supervised model predicting student grades — it worked well simply because we had labeled data. Easy win ✅
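To make “look, this is X, predict Y” concrete, here’s a minimal sketch in plain Python (no libraries): fit a straight line to labeled pairs with ordinary least squares. The hours-studied and score numbers are made up for illustration, and a real project would reach for a library such as scikit-learn instead of hand-rolled math.

```python
# Minimal supervised learning: learn y ≈ slope * x + intercept from labeled pairs
# using closed-form ordinary least squares. The data below is made up.


def fit_linear(xs, ys):
    """Return (slope, intercept) that minimize squared error on (x, y) pairs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    return slope, mean_y - slope * mean_x


def predict(model, x):
    slope, intercept = model
    return slope * x + intercept


# "Look, this is X, predict Y": hours studied (X) -> exam score (Y).
hours = [1.0, 2.0, 3.0, 4.0, 5.0]
scores = [52.0, 57.0, 62.0, 67.0, 72.0]

model = fit_linear(hours, scores)
print(model)                # (5.0, 47.0)
print(predict(model, 6.0))  # 77.0
```

Every training example carries its own answer, which is why errors show up immediately and debugging stays straightforward.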

Reinforcement learning in one line

Reinforcement learning (RL) is about agents learning by trial, error, and delayed rewards.

Instead of labeled data, you give the agent an environment and a goal, and it experiments until it finds a policy that maximizes reward.

This is what powers AlphaGo, robotics, and adaptive pricing.

RL models often require 10x–100x more compute and experimentation than supervised models.

I once tried to implement RL for a recommendation task in a hackathon. After days of setting up simulations, the system barely learned — we simply didn’t have enough compute. That’s when it hit me: RL is powerful but impractical for most business use cases.
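For contrast, here’s a minimal RL sketch: tabular Q-learning (one classic RL algorithm among many) on a made-up five-cell corridor. Nobody labels the right move; the agent only gets a reward of 1 when it reaches the last cell and has to discover through trial and error that moving right pays off. The environment, hyperparameters, and episode count are arbitrary choices for illustration.

```python
import random

# Hypothetical toy environment: a corridor of cells 0..4. The agent starts at 0.
# Reaching cell 4 ends the episode with reward 1.0; every other step gives 0.0,
# so the reward is sparse and delayed.
GOAL = 4
ACTIONS = ("left", "right")


def step(state, action):
    """Move one cell (the left wall keeps the agent at 0); return (next, reward, done)."""
    nxt = max(0, state - 1) if action == "left" else min(GOAL, state + 1)
    done = nxt == GOAL
    return nxt, (1.0 if done else 0.0), done


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(GOAL + 1) for a in ACTIONS}

    def greedy(s):
        best = max(q[(s, a)] for a in ACTIONS)
        # Break ties at random so the untrained agent wanders instead of stalling.
        return rng.choice([a for a in ACTIONS if q[(s, a)] == best])

    for _ in range(episodes):
        state, done, steps = 0, False, 0
        while not done and steps < 100:
            # Trial and error: explore a random action with probability epsilon.
            action = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(state)
            nxt, reward, done = step(state, action)
            # Q-learning target: reward now plus discounted best value from the next cell.
            future = 0.0 if done else gamma * max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + future - q[(state, action)])
            state, steps = nxt, steps + 1
    return q


q = q_learning()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)}
print(policy)  # {0: 'right', 1: 'right', 2: 'right', 3: 'right'}
```

Even this tiny problem is solved by running hundreds of simulated episodes rather than reading answers from a dataset. Scale the state space up to pricing or robotics and that trial-and-error bill grows fast.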

Quick mythbusting

Myth 1: RL is always smarter. Wrong.

Most real-world AI systems you interact with are still supervised.

RL shines in sequential decision-making but fails if you can’t simulate safely.

Myth 2: Supervised is outdated. Not true.

Even cutting-edge LLMs are pretrained with self-supervised next-token prediction, usually followed by supervised fine-tuning, before any reinforcement learning fine-tuning.

Myth 3: RL guarantees better ROI. It doesn’t.

In practice, only a small fraction of enterprises experimenting with RL reach successful deployments, largely because of cost and environment constraints.

As Prof. Richard Sutton said: “Reinforcement learning is the first field to seriously consider the whole agent and environment problem.”

That’s inspiring — but as someone who’s wasted weeks chasing RL when supervised solved the problem in hours, I’ll add this: inspiration doesn’t pay the bills. Choose wisely.

Here’s the Interest over Time chart from Google Trends:

Core technical differences that matter for product teams

Data needs & labeling

Supervised learning needs labeled data. Lots of it. Labeling is expensive. If you don’t have clean, historical datasets, supervised learning becomes painful.

In reinforcement learning, the data is generated by interacting with an environment. No labels are needed, but you do need a simulator or a real-world system that the agent can safely explore. I once wasted weeks trying to apply RL to a marketing problem, only to realize there was no realistic simulator for customer behavior. Supervised was the only practical route. The hidden trap is that RL sounds “cheaper” on data, but the cost shifts to building reliable environments.

Feedback type

In supervised learning, feedback is dense and immediate. You know right away if the prediction was right or wrong.

In RL, feedback is delayed and sparse. Rewards often come much later. Businesses need to ask: can you afford that delay? For most, the answer is no.
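Here’s what “delayed and sparse” means in numbers. A common way RL credits earlier actions is the discounted return, G_t = r_t + γ·G_{t+1}, where γ (gamma) is a discount factor between 0 and 1. This small sketch computes it for one made-up episode where the only reward arrives at the very end.

```python
def discounted_returns(rewards, gamma=0.99):
    """Compute G_t = r_t + gamma * G_{t+1} for every step of one episode.

    A supervised model gets a label for every example; an RL agent only sees
    per-step rewards and has to work out how much each earlier step deserved.
    """
    returns = [0.0] * len(rewards)
    running = 0.0
    # Walk backwards so each step can reuse the return of the step after it.
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


# Sparse, delayed feedback: four steps of silence, then +1 at the end.
print(discounted_returns([0, 0, 0, 0, 1], gamma=0.9))
# approximately [0.6561, 0.729, 0.81, 0.9, 1.0] (up to float rounding)
```

The first step receives only a diluted 0.6561 of the credit, and the agent had no signal that it was on the right track until four steps later.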

Training modality

Supervised training is offline. Train once, deploy, and retrain when data drifts.

RL is interactive and iterative. Policies adapt during training, often requiring millions of trial runs. OpenAI’s Dota 2 bot played the equivalent of 45,000 years of games in simulation to reach competence. That scale is inspiring but brutal for real-world budgets. For a fintech startup, would you really burn millions of compute hours for an RL agent when a gradient-boosted tree can predict defaults in minutes?

Compute & infrastructure

Many supervised models run fine on a laptop or a modest cluster.

RL often demands specialized infrastructure: GPU or TPU farms and sometimes distributed simulators. I learned this the hard way with a robotics side project: training a simple robotic arm with RL drained my cloud credits in a week. I scrapped it and switched to imitation learning, which trains on labeled demonstrations like any supervised model. Problem solved in hours. RL enthusiasts rarely mention these costs, but businesses should.

Evaluation

In supervised learning, you evaluate on a test set. Clean, simple, done.

In RL, evaluation is noisy. Agents might succeed in one rollout, fail in another, and you need to average thousands of runs. Success in simulation doesn’t always transfer to the real world. This makes RL risky in industries where failure costs money or reputation.
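A minimal sketch of what “average thousands of runs” looks like in practice: evaluate the same policy over many rollouts and report a rough 95% confidence interval instead of a single score. The `flaky_agent` function is a hypothetical stand-in for a real rollout (a coin that lands on success about 70% of the time), and the normal-approximation interval is a simplification.

```python
import random
import statistics


def evaluate_policy(run_episode, n_episodes, seed=0):
    """Mean episode return plus a rough 95% confidence interval.

    `run_episode(rng)` plays one episode with the policy under test and
    returns its total reward (hypothetical interface for this sketch).
    """
    rng = random.Random(seed)
    returns = [run_episode(rng) for _ in range(n_episodes)]
    mean = statistics.fmean(returns)
    # Standard error of the mean; stdev needs at least two episodes.
    stderr = statistics.stdev(returns) / n_episodes ** 0.5 if n_episodes > 1 else 0.0
    return mean, (mean - 1.96 * stderr, mean + 1.96 * stderr)


def flaky_agent(rng):
    """Stand-in rollout: succeeds (return 1.0) about 70% of the time."""
    return 1.0 if rng.random() < 0.7 else 0.0


for n in (10, 100, 10_000):
    mean, (low, high) = evaluate_policy(flaky_agent, n)
    print(f"{n:>6} episodes: mean={mean:.3f}, 95% CI=({low:.3f}, {high:.3f})")
```

With 10 episodes the interval is wide enough to hide a bad policy. It narrows roughly with the square root of the number of episodes, which is why RL evaluation budgets grow so quickly.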

Bottom line: Supervised learning is cheaper, faster, and easier to evaluate.

Reinforcement learning is powerful but costly and brittle.

If your business doesn’t need sequential decision-making or can’t afford risky exploration, supervised wins. The hype around RL is real, but unless you have massive compute and simulation capabilities, treat it as a niche tool, not a default strategy.

Detailed comparison table — Reinforcement Learning vs Supervised Learning

| Aspect | Supervised Learning (SL) | Reinforcement Learning (RL) | Why it matters for product teams |
|---|---|---|---|
| Core idea | Learn a mapping from inputs → labels using examples. | Learn a policy that maps states → actions to maximize cumulative reward. | SL targets per-instance accuracy; RL targets long-term outcomes. |
| Typical feedback | Dense, immediate labels (one label per example). | Sparse / delayed rewards (feedback may come many steps later). | Dense labels speed up training and debugging; delayed rewards require careful reward design. |
| Data required | Labeled dataset (historical examples). | Interaction logs or a simulator to generate trajectories. | Label cost vs simulator engineering cost shifts budget needs. |
| Training mode | Mostly offline / batch training on static datasets. | Often online or simulated training with exploration. | Offline is lower risk; online needs safety/rollback plans. |
| Evaluation | Static test set metrics (accuracy, F1, AUC). | Long-horizon evaluation: rollouts, cumulative reward, stability over episodes. | SL evaluation is repeatable; RL needs more complex validation and variance handling. |
| Compute & infra | Standard ML pipelines, GPU for deep nets. | Heavy compute for simulations, many episodes, often distributed training. | RL can balloon infra costs quickly. |
| Time-to-prototype | Fast: weeks for many tasks. | Slow: months to build sims, tune rewards, stabilize policies. | Faster prototypes = faster learning and lower burn. |
| Deployment risk | Generally lower; predictable outputs. | Higher: exploration can cause unsafe/undesirable behaviors. | Business risk influences whether you can safely explore in prod. |
| Explainability | Easier (model explanations, feature importances). | Harder: policies depend on trajectories; explainability tools are limited. | Regulatory & audit needs often favor SL. |
| Sample efficiency | Many SL models are sample-efficient if labels exist. | Can be sample-inefficient unless using offline or imitation methods. | More data collection time = delayed ROI. |
| Common use cases | Classification, regression, anomaly detection, OCR, fraud scoring. | Robotics, game playing, dynamic pricing, long-term recommendations. | Match problem type to the right paradigm. |
| When to prefer | When labels exist and the objective is per-instance. | When decisions are sequential and value accrues over time. | Simple rule of thumb for quick decision-making. |
| Cost drivers | Labeling effort, feature pipelines, labeled storage. | Simulator engineering, exploration safety, many training runs. | Budgets land with different teams (data vs simulation engineering). |
| Monitoring needs | Drift detection, performance on holdout sets. | Policy performance over time, reward drift, unsafe-action monitoring. | RL requires richer, faster monitoring and rollback automation. |
| Failure modes | Overfitting, label noise, concept drift. | Reward hacking, sim-to-real gap, catastrophic policies. | Some RL failures can be catastrophic and costly. |
| Hybrid patterns | N/A (but SL can feed RL). | Imitation learning, pretraining with SL, offline RL: common hybrids. | Hybrids let you get the best of both while reducing risk. |
| Talent required | Data scientists, ML engineers. | RL researchers, simulation engineers, SREs. | RL needs more specialized talent; hiring cost & availability matter. |
| Realistic ROI | High and predictable for many business problems. | High upside for complex sequential problems but uncertain ROI. | Budget and stakeholder expectations must reflect this. |
| Quick decision rule | If you can frame it per-instance → start SL. | If your objective is long-term or sequential, consider RL (only after checking simulation & safety). ✅ | Use as a checklist in product meetings. |
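The “Quick decision rule” row can be turned into a checklist you run in a product meeting. This is a minimal sketch of one way to encode it; the field names, the branch order, and the labels it returns are my own assumptions, not a standard framework.

```python
from dataclasses import dataclass


@dataclass
class Problem:
    """Checklist answers for one use case (field names are illustrative)."""
    per_instance: bool           # can success be judged one prediction at a time?
    has_labels: bool             # do labeled historical examples exist?
    sequential: bool             # do today's actions change tomorrow's options?
    has_simulator: bool          # can an agent explore without real-world harm?
    safe_live_exploration: bool  # can bad actions in production be tolerated and rolled back?
    has_rich_logs: bool          # diverse historical logs of decisions and outcomes


def recommend_approach(p: Problem) -> str:
    # Table rule 1: if you can frame it per-instance (and have labels), start with SL.
    if p.per_instance and p.has_labels:
        return "supervised"
    if p.sequential:
        # Table rule 2: consider RL only after checking simulation and safety.
        if p.has_simulator or p.safe_live_exploration:
            return "SL pretraining + RL fine-tuning" if p.has_labels else "reinforcement"
        # No safe way to explore: learn from what was already logged.
        if p.has_rich_logs:
            return "offline RL or imitation learning"
        return "rules + supervised baseline"
    # Per-instance problem without labels: the bottleneck is labeling, not the algorithm.
    return "collect labels, then supervised"


churn = Problem(True, True, False, False, False, False)
pricing = Problem(False, False, True, True, False, False)
print(recommend_approach(churn))    # supervised
print(recommend_approach(pricing))  # reinforcement
```

The ordering is deliberate: the cheap, predictable option is checked first, and RL is only reached once simulation or safe exploration has been confirmed.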

Hybrid and Middle-Ground Patterns (Practical Hacks Businesses Actually Use)

Not every problem fits neatly into reinforcement learning or supervised learning.

In fact, most real-world projects land somewhere in the middle.

A smart move is to start with supervised pretraining on existing data, then fine-tune with RL for long-term goals.

This shortcut saves compute and reduces instability.

I once built a recommender system this way — supervised got me 80% accuracy, RL pushed engagement up by 12% over a month.

Still, don’t assume it’s a silver bullet — if your reward is poorly defined, RL fine-tuning will still go rogue.

When labeled data exists from expert decisions, imitation learning can mimic that behavior before adding RL.

I remember testing it on a toy robotics project, and the robot could already “walk” before RL kicked in.

It only worked because we had clean expert logs.

Without quality data, it just cloned mistakes.

This approach reduces exploration costs, but in messy business data, expect diminishing returns.
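Imitation learning in its simplest form (behavior cloning) is just supervised learning on (state, expert action) pairs. This toy sketch uses a majority vote per state as the “model”; a real system would train a classifier. The logs are made up, and the second run shows the “cloned mistakes” problem: if a sloppy operator dominates the logs, the clone copies them.

```python
from collections import Counter, defaultdict


def behavior_clone(expert_logs):
    """Fit a policy to (state, expert_action) pairs: the most frequent action per state."""
    votes = defaultdict(Counter)
    for state, action in expert_logs:
        votes[state][action] += 1
    return {state: counts.most_common(1)[0][0] for state, counts in votes.items()}


# Hypothetical logs from a four-state task: a careful expert always moves right.
clean_logs = [(0, "right"), (1, "right"), (2, "right"), (3, "right")] * 10
# Same logs plus a sloppy operator who keeps moving left in state 2.
noisy_logs = clean_logs + [(2, "left")] * 15

print(behavior_clone(clean_logs))  # {0: 'right', 1: 'right', 2: 'right', 3: 'right'}
print(behavior_clone(noisy_logs))  # {0: 'right', 1: 'right', 2: 'left', 3: 'right'}
```

Nothing in the clone knows whether an action was good, only that it was common. That’s why the quality of the expert logs caps what this approach can reach before RL takes over.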

Offline RL is the “safe” RL: train only on historical logs, no risky live exploration.

Businesses love this pitch, but offline RL needs high-quality, diverse logs, and most companies simply don’t have them.

I’ve tried it with clickstream data — the model worked well in simulation but collapsed in production.

Bottom line: offline RL sounds nice, but most teams won’t have the data scale to pull it off.
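To see why offline RL leans so hard on diverse logs, here’s a minimal sketch: tabular Q-learning that only replays a fixed list of logged (state, action, reward, next state, done) transitions, with no live exploration. The tiny log below is hypothetical and comes from an operator who only ever chose “right”.

```python
from collections import defaultdict


def offline_q_learning(transitions, actions, gamma=0.9, sweeps=50):
    """Repeatedly replay logged transitions; never query a live environment.

    Uses a learning rate of 1.0, which is only reasonable because these toy
    logs are deterministic. Unlogged (state, action) pairs keep the default 0.0.
    """
    q = defaultdict(float)
    for _ in range(sweeps):
        for s, a, r, s_next, done in transitions:
            future = 0.0 if done else gamma * max(q[(s_next, b)] for b in actions)
            q[(s, a)] = r + future
    return q


ACTIONS = ("left", "right")
logs = [  # (state, action, reward, next_state, done)
    (0, "right", 0.0, 1, False),
    (1, "right", 0.0, 2, False),
    (2, "right", 0.0, 3, False),
    (3, "right", 1.0, 4, True),
]

q = offline_q_learning(logs, ACTIONS)
logged = {(s, a) for s, a, *_ in logs}
for s in range(4):
    for a in ACTIONS:
        status = "learned from logs" if (s, a) in logged else "never logged: default, not evidence"
        print(s, a, round(q[(s, a)], 3), status)
```

Every “left” value comes out as 0.0 only because the log never tried it, not because the data shows it’s worse. In a real business log, those blind spots are where an offline policy can look great on paper and then fail once it chooses actions the logs never covered.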

Tweaking reward signals to guide agents faster is tempting.

I once “shaped” rewards in a pricing model, thinking it would encourage better long-term revenue.

Instead, it gamed the metric and tanked customer satisfaction.

Reward design is often the hardest part of RL.
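One well-studied way to add shaping bonuses without changing which policy is optimal is potential-based reward shaping (Ng, Harada & Russell, 1999): add γ·Φ(s') - Φ(s) for some “potential” function Φ over states. This sketch checks the key property on one made-up trajectory: the shaped return differs from the original only by the constant Φ(start), so every policy is shifted by the same amount. The progress-based potential is an arbitrary example, and this is a general technique, not what I used in the pricing model above.

```python
def discounted_sum(values, gamma):
    return sum(v * gamma ** t for t, v in enumerate(values))


def shaped_rewards(states, rewards, potential, gamma):
    """Potential-based shaping: r'_t = r_t + gamma * phi(s_{t+1}) - phi(s_t).

    `states` has one more entry than `rewards`; the final state is terminal and
    is given potential 0, so the bonuses telescope over the episode.
    """
    shaped = []
    for t, r in enumerate(rewards):
        terminal_next = t == len(rewards) - 1
        next_phi = 0.0 if terminal_next else potential(states[t + 1])
        shaped.append(r + gamma * next_phi - potential(states[t]))
    return shaped


def progress(state):
    """Hypothetical potential: how far along the task the agent is."""
    return float(state)


gamma = 0.9
states = [1, 2, 3, 4]      # 4 is the terminal goal state
rewards = [0.0, 0.0, 1.0]  # reward only on the final step

original = discounted_sum(rewards, gamma)
shaped = discounted_sum(shaped_rewards(states, rewards, progress, gamma), gamma)
print(original, shaped)    # shaped == original - progress(states[0]), up to rounding
```

An ad-hoc bonus (say, a flat reward for some proxy metric) has no such guarantee, which is how agents end up gaming the metric instead of the goal.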

Sometimes a simple rules-based system + supervised model beats a fancy RL setup.

I’ve seen fraud detection pipelines where RL was overkill, burning GPU hours, while a logistic regression plus a few domain rules caught 95% of fraud at less than 10% of the cost.

The point is, don’t chase hype when rules and labels work just fine.
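Here’s a minimal sketch of that “rules + logistic regression” pattern: a couple of hard domain rules run first, and everything else is scored by a logistic model. The feature names, weights, bias, and thresholds are hypothetical placeholders; in practice the weights would come from fitting a logistic regression on labeled fraud cases.

```python
import math

# Hypothetical weights and bias; in a real pipeline these come from fitting a
# logistic regression on labeled fraud cases, not from hand-tuning.
WEIGHTS = {"amount_zscore": 1.2, "new_device": 0.8, "foreign_ip": 0.6}
BIAS = -3.0


def fraud_probability(tx):
    """Logistic model: sigmoid of a weighted sum of features (missing features count as 0)."""
    z = BIAS + sum(w * tx.get(name, 0.0) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def score_transaction(tx, threshold=0.5):
    """Return (decision, reason). Hard domain rules first, then the model."""
    if tx.get("card_reported_stolen"):
        return "block", "rule: card reported stolen"
    if tx.get("amount", 0) > 10_000 and tx.get("new_device"):
        return "review", "rule: large amount on a new device"
    p = fraud_probability(tx)
    return ("review" if p >= threshold else "allow"), f"model: p={p:.2f}"


print(score_transaction({"card_reported_stolen": True}))  # ('block', 'rule: card reported stolen')
print(score_transaction({"amount": 50, "amount_zscore": 0.1}))  # ('allow', 'model: p=0.05')
print(score_transaction({"amount": 900, "amount_zscore": 3.0, "new_device": 1, "foreign_ip": 1}))
# ('review', 'model: p=0.88')
```

The rules are cheap to audit and easy to explain to a regulator, and the model handles the fuzzy middle. Neither part needs a simulator or exploration.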

Hybrid patterns give flexibility, but they’re not free wins.

Each carries hidden costs — from data quality issues to reward design headaches.

If you’re considering one, ask: “Do I have enough data, the right expertise, and a business problem that actually needs it?”

If not, a lean supervised model will take you further, faster.

Common pitfalls and hidden costs

Let’s be brutally honest here—reinforcement learning (RL) looks shiny on paper but the real world is far less forgiving.

One big trap is reward hacking. Agents often exploit loopholes in badly designed reward systems instead of actually solving the problem.

I hit this wall myself during a side project. I set up a toy trading environment, and instead of maximizing returns, my RL agent learned to avoid making trades altogether because it got “rewarded” for minimizing losses, and not trading at all means no losses. It looked smart at first, but in reality it was just a cowardly AI.
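Here’s a toy reconstruction of that failure, with made-up numbers rather than my original project: a market where each trade has a small positive edge, scored under two reward functions. Under a reward that only counts losses, doing nothing is the optimal policy, so any decent optimizer will learn to sit still.

```python
import random


def average_reward(policy, reward_fn, days=10_000, seed=0):
    """Average daily reward of a fixed policy in a hypothetical toy market.

    Each trade's profit is uniform on [-0.95, 1.05]: a small positive edge of +0.05.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(days):
        pnl = rng.uniform(-0.95, 1.05) if policy == "trade" else 0.0
        total += reward_fn(pnl)
    return total / days


def loss_only_reward(pnl):
    return min(pnl, 0.0)  # "minimize losses": gains earn nothing


def profit_reward(pnl):
    return pnl            # the actual business objective


for reward_fn in (loss_only_reward, profit_reward):
    scores = {policy: average_reward(policy, reward_fn) for policy in ("trade", "hold")}
    best = max(scores, key=scores.get)
    print(f"{reward_fn.__name__}: {scores} -> optimal policy: {best}")
# loss_only_reward picks "hold"; profit_reward picks "trade"
```

The agent isn’t broken; it’s doing exactly what the reward asked. The fix lives in the reward, not the algorithm.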

Another often ignored pitfall is simulation mismatch. Training an agent in a simulated world is easy; deploying it in the messy real world is brutal.

I experienced this when I tried a cart-pole RL project—I got perfect balance in simulation but the physical hardware failed in seconds. That mismatch cost weeks of debugging and made me realize why most RL case studies remain stuck in research labs rather than real businesses.

Now compare that with supervised learning (SL)—the hidden cost here isn’t simulation but label drift. Models rely heavily on labeled data, but labels get outdated quickly.

I saw this firsthand when a friend’s e-commerce price-optimization model kept recommending discounts months after a seasonal sale was over because the labels in training data weren’t refreshed.
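A cheap guard against that kind of silent staleness is to compare the distribution a model was trained on with what it sees now. Below is a minimal sketch of the Population Stability Index (PSI), one common drift score. The discount data is made up, and the 0.1/0.25 thresholds in the docstring are a widely used rule of thumb, not a standard.

```python
import bisect
import math


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between a training sample and a recent sample.

    Rule of thumb (conventions vary): < 0.1 stable, 0.1-0.25 worth watching,
    > 0.25 a significant shift that usually calls for retraining or relabeling.
    """
    def proportions(values):
        counts = [0] * (len(edges) - 1)
        for v in values:
            i = bisect.bisect_right(edges, v) - 1
            if v == edges[-1]:
                i = len(counts) - 1       # include the right edge in the last bin
            if 0 <= i < len(counts):      # ignore values outside the binned range
                counts[i] += 1
        total = max(1, sum(counts))
        # Floor empty bins at eps so the log term stays finite.
        return [max(c / total, eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


edges = [0, 10, 20, 30, 40, 50]                         # discount % buckets
during_sale = [25, 35, 22, 38, 30, 27, 33, 21, 36, 29]  # discount levels in the training labels
after_sale = [0, 5, 0, 2, 8, 0, 3, 0, 12, 0]            # discount levels that convert now

print(round(psi(during_sale, during_sale, edges), 3))  # 0.0: no drift
print(round(psi(during_sale, after_sale, edges), 1))   # far above 0.25: time to refresh labels
```

PSI only tells you the inputs or labels moved; deciding whether to retrain, relabel, or roll back is still a human call.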

Another unspoken truth—talent scarcity. RL expertise is rare and expensive.

That means if you’re betting on RL, you’re also betting on hiring costs, slower timelines, and maintenance headaches. In contrast, supervised learning talent is plentiful, frameworks are stable, and you’ll find tons of pretrained models you can fine-tune in hours.

Dr. Andrew Ng once said, “If a problem can be solved with supervised learning, don’t use RL.” That might sound harsh, but he’s right—why burn months of compute and budget when a linear regression can deliver the same ROI?

My biggest regret in early projects was chasing the “coolness factor” of RL instead of sticking with simple supervised pipelines that worked.

Bottom line: RL’s pitfalls = reward hacking, simulation mismatch, talent scarcity. SL’s pitfalls = label drift, data dependency, hidden failure modes.

Both come with hidden costs, but the danger is falling for hype. You don’t need the fanciest hammer for every nail—you need the tool that actually fixes the business problem.

Real-world vignettes (short, business-focused case studies)

When we talk about reinforcement learning vs supervised learning, theory often sounds exciting but the real stories are where the truth hides.

A retail company wanted to predict customer churn. They had thousands of labeled past cases, clear outcomes, and an urgent need to reduce losses.

They picked a supervised model because it was fast, cheap, and effective. Within weeks, accuracy hit 85% and customer retention improved by 12%.

The criticism? These models age quickly—once patterns shift, retraining becomes constant overhead.

A ride-hailing startup tested dynamic pricing. Static supervised models failed—they optimized for immediate rides but lost long-term engagement.

RL stepped in, continuously adjusting fares based on environment feedback. The result was 20% higher long-term revenue and better driver satisfaction.

The painful part: building simulators cost millions, and training took weeks on GPU clusters. I tried experimenting with RL pricing on a side project, and honestly, I abandoned it after two months—too much infra, too little return for a student project. RL shines, but it demands deep pockets and patience.

Then comes the hybrid middle ground. YouTube recommendations, for example, don’t rely on pure supervised learning or pure RL—they combine both.

First, supervised models predict immediate relevance. Then RL fine-tunes for long-term engagement.

This combo saved them from short-term clickbait traps while maximizing session time.

When I built a small-scale recommender during my coursework, imitation learning, a supervised shortcut to RL, gave me 70% of the benefit without months of training.

That’s the hidden hack most don’t talk about: sometimes “good enough” hybrids outperform idealized pure RL.

In all three cases, the deciding factor wasn’t accuracy—it was cost, scalability, and ROI.

Use supervised when labels exist and speed matters. Use RL when sequential decisions drive real value.

And don’t be ashamed to pick hybrids—they’re often the smartest business move.

FAQ — Quick Answers for Busy Product & ML Teams

Is reinforcement learning always better for long-term objectives?

Not at all. RL shines in sequential decision tasks where actions affect future rewards, like dynamic pricing or game AI.

But if your goal is predicting user churn or classifying images, supervised learning is faster, cheaper, and less risky.

I learned this the hard way: my RL experiment to optimize email engagement failed spectacularly — a simple classification model got 3x better results in one day.

Can I “fake” RL using stacked supervised models?

Sometimes. Techniques like behavior cloning let you mimic expert actions using labeled datasets, but they won’t handle long-term delayed rewards well.

It’s a shortcut, not a replacement. I tried cloning a recommendation policy once — worked okay initially, but it collapsed after users started changing behavior.

How risky is exploration in production?

High risk. RL explores new strategies, which can backfire in live environments — think pricing errors or content you didn’t expect to serve.

That’s why many businesses use simulators first. In my own project, RL exploration once triggered 5,000 unnecessary API calls, costing $600.
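One cheap safeguard against that kind of bill is a hard cap on exploration: the agent may try something new only while an exploration budget lasts, and otherwise falls back to current production behavior. This is a minimal sketch under my own assumptions; the action names are hypothetical stand-ins, and real systems usually add per-action allowlists and automated rollback on top.

```python
import random


class BudgetedExplorer:
    """Epsilon-greedy exploration with a hard cap on the number of exploratory actions."""

    def __init__(self, epsilon, max_explorations, seed=0):
        self.epsilon = epsilon
        self.remaining = max_explorations
        self.rng = random.Random(seed)

    def choose(self, baseline_action, candidate_actions):
        """Return (action, explored). Explore only while budget remains."""
        if self.remaining > 0 and self.rng.random() < self.epsilon:
            self.remaining -= 1
            return self.rng.choice(candidate_actions), True
        return baseline_action, False  # safe default: what production does today


explorer = BudgetedExplorer(epsilon=0.2, max_explorations=50)
explored = 0
for _ in range(10_000):
    action, was_exploration = explorer.choose("cached_response", ["call_api_a", "call_api_b"])
    explored += was_exploration
print(explored)  # never more than 50, no matter how long it runs
```

The cap turns “exploration might cost anything” into a number you can put in a budget review.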

What’s the minimum team to ship RL?

Honestly, you need more than a single ML engineer. Expect data engineers, ML engineers, simulation experts, and monitoring specialists.

Supervised models? Often one skilled engineer can ship a working product. I’ve personally seen teams underestimate this and burn months on RL experiments that could’ve been solved with supervised learning in weeks.

When should I consider hybrid approaches?

Use supervised pretraining + RL fine-tuning when you want stable initial performance and then improve long-term rewards.

Offline RL (training on logs) is safer than full online exploration. I applied this in a recommendation system: pretraining on historical user data delivered 80% of the reward improvement, and RL fine-tuning added the last 20% without breaking production.

Conclusion

Choosing between Reinforcement Learning (RL) and Supervised Learning (SL) isn’t about picking the flashiest algorithm; it’s about business impact, cost, and risk.

I’ve learned this the hard way—spending two months building a small RL prototype for a recommendation engine, only to realize a supervised model achieved 95% of the same performance with one-fifth the effort.

SL is fast, explainable, and cheap—perfect when you have labeled data and short-term decisions.

RL shines when rewards are delayed, decisions are sequential, and simulation is feasible, but beware of high compute costs, risky exploration, and complex maintenance.

Hybrid approaches—pretraining with SL, fine-tuning with RL—often deliver the best ROI without overcomplicating your stack.

My rule now: always map the business objective first, then pick the simplest model that meets it, not the fanciest one.

In my projects at Pythonorp, this mindset saved weeks of wasted engineering time while delivering measurable value to business stakeholders. 🔥

Actionable takeaway: run your decision framework, check data availability, evaluate risk tolerance, and choose the model that aligns with your product KPIs, not your ML dreams.