Machines are learning faster than ever—and in some cases, they’re outperforming us.

From diagnosing diseases to driving cars, machine learning (ML) is starting to do things that once needed a human brain. In 2024, over 40% of businesses already use AI to automate tasks we once thought only people could handle.

But here’s the thing: does that mean ML is smarter than us?

Not quite.

ML and human intelligence are two completely different beasts. One runs on data, the other on experience, emotion, and instinct.

I first started asking this question during a uni project where I had to build an ML model to classify handwritten digits. The model hit 98% accuracy, but when I scribbled a messy digit, it got it wrong. I could read it. Instantly. That moment stuck with me.

So here we are.

In this post, we’ll break down the real differences between ML and human intelligence—without the fluff. No myths, no hype. Just a clear, side-by-side look at what each is good at, where they fall short, and how they can work together.

What Is Machine Learning?

Machine learning (ML) is a subset of artificial intelligence that lets machines learn from data without being explicitly told what to do. In simple terms, it recognizes patterns and makes decisions based on them.

You feed it a lot of data, and it figures out the logic on its own. That’s why it powers everything from YouTube recommendations to medical diagnostics.

I still remember the first ML model I built in college. It was a spam filter. I gave it hundreds of emails labeled as spam or not, and in a few minutes, it started outperforming me in accuracy.

But the moment I gave it a weirdly worded email in Bengali slang, it flagged it as spam even though it wasn’t 😅. That’s the thing—ML only knows what it’s seen. It can’t reason like we do.
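To see why, here's a minimal naive Bayes spam filter in Python, a classic approach for this task. It's a sketch, not my original college code: the six training emails and the function names are made up for illustration.

```python
import math
from collections import Counter

# Hypothetical labeled training emails: (text, label)
TRAIN = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("cheap meds win big", "spam"),
    ("meeting moved to monday", "ham"),
    ("lunch with the project team", "ham"),
    ("notes from monday meeting", "ham"),
]

def train(examples):
    """Count word frequencies per label (multinomial naive Bayes)."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.split())
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, label_counts, vocab

def classify(text, model):
    """Return the label with the highest log-probability score."""
    word_counts, label_counts, vocab = model
    total_docs = sum(label_counts.values())
    scores = {}
    for label in label_counts:
        # Log prior plus log likelihoods with add-one (Laplace) smoothing
        score = math.log(label_counts[label] / total_docs)
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in text.split():
            if word not in vocab:
                continue  # a never-seen word carries no evidence at all
            score += math.log((word_counts[label][word] + 1) / denom)
        scores[label] = score
    return max(scores, key=scores.get)

model = train(TRAIN)
print(classify("free prize now", model))        # spam
print(classify("monday meeting notes", model))  # ham
print(classify("kemon acho bondhu", model))     # spam: all words unseen, so the tie falls to the first label
```

The last line is the Bengali-slang problem in miniature: a message made entirely of words the model never saw gets no word evidence, so the verdict comes down to priors and tie-breaking, not understanding.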

At its core, ML uses algorithms trained on structured or unstructured data. IBM once estimated that 90% of the world’s data had been created in just the previous two years (IBM Big Data Hub). That’s both an opportunity and a massive challenge.

ML thrives on data. But if the data is noisy, biased, or incomplete, the model fails—quietly and confidently.

There are three major types of machine learning, and each works very differently.

Supervised Learning

This is the most popular. You give the model labeled data—say, images of cats and dogs—and it learns to tell them apart.

Think of it like a student studying with an answer key. It performs well in tasks like spam detection, image recognition, or fraud analysis.

But if the labels are wrong or limited, the model becomes dumb—fast.
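Here's a toy version of that "answer key" idea: a nearest-centroid classifier, one of the simplest supervised methods. The two features (weight in kg, ear length in cm) and all the numbers are invented. Mislabel just two cats as dogs, and a borderline animal flips class.

```python
def fit_centroids(examples):
    """Average the feature vectors for each label: this is the 'learning' step."""
    sums, counts = {}, {}
    for (weight, ear), label in examples:
        sw, se = sums.get(label, (0.0, 0.0))
        sums[label] = (sw + weight, se + ear)
        counts[label] = counts.get(label, 0) + 1
    return {label: (sw / counts[label], se / counts[label]) for label, (sw, se) in sums.items()}

def predict(point, centroids):
    """Pick the label whose centroid is closest (squared Euclidean distance)."""
    def dist2(label):
        cx, cy = centroids[label]
        return (cx - point[0]) ** 2 + (cy - point[1]) ** 2
    return min(centroids, key=dist2)

# Hypothetical labeled data: ((weight_kg, ear_length_cm), label)
CLEAN = [((4.0, 6.0), "cat"), ((5.0, 7.0), "cat"), ((3.5, 5.5), "cat"),
         ((25.0, 10.0), "dog"), ((30.0, 12.0), "dog"), ((20.0, 9.0), "dog")]

# Same data, but two cats carry the wrong label
NOISY = [((4.0, 6.0), "dog"), ((5.0, 7.0), "dog")] + CLEAN[2:]

borderline = (12.0, 8.0)
print(predict(borderline, fit_centroids(CLEAN)))  # cat
print(predict(borderline, fit_centroids(NOISY)))  # dog
```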

Unsupervised Learning

No labels here. The model tries to discover hidden patterns or groupings on its own.

I once used it to cluster user behavior on a website. It found user segments I didn’t even know existed. But interpreting those clusters was tough. You don’t always know why it grouped things the way it did.

So while insights can be surprising, they’re not always useful.
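For a feel of how clustering works, here's a bare-bones k-means in Python. The "sessions" (pages per visit, minutes on site) are hypothetical, and real libraries use smarter initialization than taking the first k points. Notice what comes back: coordinates and groups, but no names or explanations. Labeling the segments is still your job.

```python
def dist2(a, b):
    """Squared Euclidean distance between two 2-D points."""
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2

def kmeans(points, k, iterations=10):
    centroids = list(points[:k])  # naive init: the first k points
    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: dist2(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its members
        for i, members in enumerate(clusters):
            if members:
                centroids[i] = (sum(x for x, _ in members) / len(members),
                                sum(y for _, y in members) / len(members))
    return centroids, clusters

# Hypothetical sessions: (pages_per_visit, minutes_on_site)
SESSIONS = [(1, 2), (2, 1), (1.5, 1.5), (10, 30), (12, 28), (11, 32)]
centroids, clusters = kmeans(SESSIONS, k=2)
print(sorted(centroids))  # [(1.5, 1.5), (11.0, 30.0)]
```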

Reinforcement Learning

This one mimics trial-and-error. The model learns by interacting with an environment and getting rewards or penalties.

It’s used in robotics, game AI, and self-driving research. Google’s AlphaGo, built by DeepMind, beat top Go professional Lee Sedol in 2016 by combining deep neural networks, tree search, and reinforcement learning through self-play.

But here’s the issue—it’s data-hungry, complex, and not great for most business problems.
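Full reinforcement learning won't fit in a snippet, but its trial-and-error core does. Below is an epsilon-greedy multi-armed bandit, the simplest RL-style setting (a single state, no planning). The three payout probabilities are made up; the agent doesn't know them and has to discover them from rewards alone.

```python
import random

def epsilon_greedy(true_payout_probs, steps=2000, epsilon=0.1, seed=0):
    """Learn which action pays best purely from reward feedback."""
    rng = random.Random(seed)
    n_arms = len(true_payout_probs)
    estimates = [0.0] * n_arms  # learned value of each action
    pulls = [0] * n_arms
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: try a random action
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])  # exploit the best so far
        # The environment answers with a reward (1) or nothing (0)
        reward = 1.0 if rng.random() < true_payout_probs[arm] else 0.0
        pulls[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / pulls[arm]  # running average
    return estimates, pulls

estimates, pulls = epsilon_greedy([0.2, 0.5, 0.8])
best = max(range(len(estimates)), key=lambda a: estimates[a])
print(best, pulls)  # the agent should settle on arm 2, the one paying out 80% of the time
```

Even this toy needs thousands of trials to learn one fact about three options, which hints at why RL gets data-hungry fast.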

And let’s be real: ML has no consciousness, emotion, or actual understanding.

As AI researcher Gary Marcus puts it, “Machine learning is amazing at curve fitting, but terrible at reasoning.” Even ChatGPT just predicts the most likely next word based on your input. It doesn’t “understand” anything the way a human does.
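"Predicting the next word" can be illustrated with a bigram model that simply counts which word tends to follow which. This is a drastically simplified stand-in: ChatGPT uses a large neural network over tokens, not raw word counts, though its training objective is also to guess what comes next. The tiny corpus is made up.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(word, follows):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    options = follows.get(word.lower())
    if not options:
        return None
    return options.most_common(1)[0][0]

model = train_bigrams("the cat sat on the mat and the cat ate the fish")
print(predict_next("the", model))  # cat (it followed "the" twice)
print(predict_next("dog", model))  # None: no data, no guess
```

There's no notion of what a cat *is* anywhere in that code, only counts.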

There are also serious concerns with ML systems.

Amazon had to scrap an internal AI hiring tool after it downgraded resumes containing the word “women’s” (Reuters). In healthcare, a 2019 study showed that a widely used algorithm exhibited racial bias against Black patients (Science).

So while ML is powerful, it’s also flawed. And those flaws can scale very quickly if you’re not careful.

ML is not intelligent. It’s math. It’s prediction. It’s statistics dressed in fancy models.

Useful? Absolutely.

But the next time your model labels a cat as a fire truck, you’ll realize it’s still a long way from thinking like us 🤖🐱🚒.

That said, ML is already transforming industries—from finance to retail, healthcare to marketing.

We just need to stop expecting it to be something it’s not.

Key Differences Between Machine Learning and Human Intelligence

Learning Style: Data-Driven vs Experience-Based

Machine learning learns from data; humans learn from experience.

An ML model improves by crunching thousands or millions of labeled examples.

If the data’s biased or incomplete, so is the model.

I once trained a classifier on medical data to detect pneumonia—great accuracy, but it failed on rare edge cases.

Why? Because it never “felt” the context.

Humans don’t need 10,000 examples to get it right.

One intense experience often teaches us for life.

That’s the core difference.

As Prof. Gary Marcus (AI expert, NYU) put it, “Machines don’t understand causality—they just detect patterns.”

Speed and Scalability

ML is insanely fast.

It can scan through millions of emails in seconds, while a human would take weeks.

According to IBM, AI can reduce data processing time by 80% in enterprise systems (IBM Research, 2023).

But speed isn’t everything.

I once worked on a fraud detection script that caught unusual behavior instantly—but it also flagged loyal users.

Speed, without reasoning, is dangerous.
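Here's how that happens with even a simple rule. This sketch flags a transaction whose z-score (distance from the customer's average, in standard deviations) exceeds 3. The purchase history and threshold are hypothetical, not taken from the script I worked on.

```python
import statistics

def is_suspicious(history, amount, threshold=3.0):
    """Flag an amount more than `threshold` standard deviations above the customer's mean."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # sample standard deviation; needs 2+ values
    if stdev == 0:
        return amount > mean
    return (amount - mean) / stdev > threshold

# Hypothetical history of a long-time customer's card purchases (in dollars)
history = [25, 30, 22, 35, 28, 31, 27]
print(is_suspicious(history, 33))   # False: an ordinary purchase
print(is_suspicious(history, 900))  # True: a one-off laptop purchase looks like fraud to the rule
```

The rule fires in microseconds, but it only sees a number. It can't know the customer just started a new job and needed a laptop.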

Humans are slower but more cautious, which is often necessary.

Flexibility and Adaptability

Humans adapt instantly.

If a rule changes or a new variable shows up, we think, reason, and switch.

ML? Not so much.

Most ML systems break when the environment shifts—known as the “distribution shift problem.”

A 2022 Stanford paper showed that image classifiers trained on certain lighting conditions dropped 25% in accuracy under different lighting (source).
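A toy example shows the mechanism. Suppose a model separates two kinds of objects using a single brightness feature and learns a threshold halfway between the class averages. The numbers are invented and aren't meant to reproduce the Stanford result; they just show how a uniform lighting shift quietly breaks a rule that was perfect on the training data.

```python
def fit_threshold(values, labels):
    """Place the decision threshold halfway between the two class means."""
    pos = [v for v, y in zip(values, labels) if y == 1]
    neg = [v for v, y in zip(values, labels) if y == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2

def accuracy(values, labels, threshold):
    """Fraction of examples where (value > threshold) matches the 0/1 label."""
    correct = sum((v > threshold) == (y == 1) for v, y in zip(values, labels))
    return correct / len(labels)

# Hypothetical feature: average brightness (0-255) of an image region
train_x = [60, 70, 80, 90, 160, 170, 180, 190]
train_y = [0, 0, 0, 0, 1, 1, 1, 1]
threshold = fit_threshold(train_x, train_y)
print(threshold)                                # 125.0
print(accuracy(train_x, train_y, threshold))    # 1.0 under training conditions

# Same objects under brighter lighting: every reading shifts up by 50
shifted_x = [v + 50 for v in train_x]
print(accuracy(shifted_x, train_y, threshold))  # 0.75, and nothing raised an error
```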

In real life, everything shifts.

I once had to retrain a chatbot model from scratch just because users started phrasing their questions differently.

Total rigidity.

That’s not intelligent—it’s brittle.

Emotion and Consciousness

ML doesn’t feel.

It doesn’t understand joy, grief, fear, or guilt.

It can simulate empathy using tone and phrases, but there’s no actual emotion or awareness.

Humans, on the other hand, factor emotion into every decision—whether we realize it or not.

I’ve seen a simple sentence misinterpreted by a chatbot just because it lacked emotional context.

That’s why AI-powered mental health bots often fail to truly help—they miss nuance.

As Dr. Kate Darling from MIT says, “AI has no internal narrative. It mimics connection, but it doesn’t experience it.” 💡

Creativity and Innovation

Humans invent. ML imitates.

Midjourney can generate stunning art, but it’s always remixing from existing data.

It doesn’t create from the void.

When I use GPT to brainstorm blog titles, it gives 10 okay ones.

But my best titles always come from a gut-punch moment, something it can’t replicate.

The World Economic Forum agrees: true creativity and intuition remain uniquely human (source).

Generalization vs Specialization

ML is narrow.

It’s built for a specific task and often can’t generalize outside of that.

An image classifier can’t suddenly do text summarization.

Humans? We can juggle, adapt, combine skills.

One of my ML models trained to detect malware couldn’t recognize new virus behavior without retraining.

I could, because I understood the why behind the attack—not just the what.

That’s general intelligence.

AGI (Artificial General Intelligence) still doesn’t exist.

Decision-Making Process

ML makes decisions using math—probabilities, loss functions, optimization.

It’s cold logic.
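To make "probabilities, loss functions, optimization" concrete, here's logistic regression trained by plain gradient descent on the log-loss. The single feature is hypothetical and already centered around zero. Everything the model "decides" comes from nudging two numbers, `w` and `b`, downhill on the loss.

```python
import math

def sigmoid(z):
    """Squash any real number into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def log_loss(xs, ys, w, b):
    """Mean cross-entropy between predicted probabilities and 0/1 labels."""
    total = 0.0
    for x, y in zip(xs, ys):
        p = sigmoid(w * x + b)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(xs)

def train_logistic(xs, ys, lr=0.1, epochs=1000):
    """Fit w and b by gradient descent on the mean log-loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]  # prediction minus label
        w -= lr * sum(e * x for e, x in zip(errors, xs)) / n
        b -= lr * sum(errors) / n
    return w, b

# Hypothetical, already-centered feature and 0/1 outcomes
XS = [-2.0, -1.5, -1.0, 1.0, 1.5, 2.0]
YS = [0, 0, 0, 1, 1, 1]

w, b = train_logistic(XS, YS)
print(log_loss(XS, YS, 0.0, 0.0))  # starting loss: ln 2, about 0.693
print(log_loss(XS, YS, w, b))      # much lower after training
print(sigmoid(w * 1.2 + b))        # probability of class 1 for a new input
```

Nothing in that loop knows about context or fairness. If the labels encode a biased past, gradient descent will faithfully minimize the loss on that bias.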

Humans, meanwhile, make decisions with context, memory, emotion, and ethics all jumbled together.

That’s both a strength and a flaw.

I’ve seen ML models recommend layoffs just based on performance metrics, ignoring personal factors.

Useful? Yes. Fair? No.

Even Google’s own AI ethics researchers warned that black-box systems can reinforce existing biases, and the team’s co-leads, Timnit Gebru and Margaret Mitchell, were forced out of the company in 2020 and 2021 amid disputes over that work (source).

Bottom line?

Machine learning is fast, scalable, and brilliant in narrow lanes.

But human intelligence is flexible, conscious, ethical, and creative.

One complements the other—but they are not the same.

Let’s stop pretending they are 😌.

📊 Machine Learning vs Human Intelligence: Side-by-Side Comparison

| Aspect | Machine Learning (ML) | Human Intelligence |
|---|---|---|
| Learning Style | Learns from large amounts of labeled or structured data | Learns from experience, context, and emotions |
| Speed | Processes millions of data points in seconds | Slower, but more deliberate and nuanced |
| Adaptability | Struggles with change or unseen scenarios | Instantly adapts to new situations |
| Creativity | Generates content by remixing existing data | Creates new ideas from scratch using intuition and insight |
| Emotion & Ethics | Lacks consciousness, feelings, or ethical understanding | Driven by empathy, values, and cultural context |
| Generalization | Specialized for narrow tasks only | Can transfer knowledge across domains |
| Decision Making | Based on math, patterns, and logic | Involves ethics, memory, intuition, and judgment |
| Scalability | Easily scales across thousands of tasks without fatigue | Limited by human time, energy, and attention |
| Error Handling | Fails silently if data is bad or distribution shifts | Can detect, reflect, and correct based on reasoning |

Where ML Outperforms Humans (With Examples)

Machine learning beats humans at speed, scale, and repetition. It doesn’t sleep, take breaks, or forget.

While we multitask with effort, ML systems can process millions of data points in seconds—no coffee required ☕.

For instance, Google’s Med-PaLM 2 scored 86.5% on US Medical Licensing Exam-style questions, which Google described as expert-level performance (source).

That’s not just trivia. It suggests these models can answer clinical questions with near-expert accuracy, at scale.

Pattern recognition is its superpower. In finance, JPMorgan’s COiN system can review 12,000 commercial credit agreements in seconds, work that used to take lawyers and loan officers about 360,000 hours a year (source).

I remember once manually scanning 100+ rows of sales data for a project—it took me half a day. My Python ML script? 7 seconds.

Repetitive tasks are where humans struggle. We get bored. We lose focus.

But ML? It thrives there.

From chatbots like Intercom’s Fin AI to warehouse robots at Amazon, these systems handle the same task 24/7 with consistent accuracy.

OpenAI’s Whisper model, meanwhile, approaches human-level accuracy in transcription and holds up well in noisy environments.

A test by AssemblyAI showed Whisper outperforming Google, AWS, and IBM in word error rates (source).
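Word error rate, the metric behind comparisons like that, is the word-level edit distance between a reference transcript and the model's output, divided by the number of reference words: (substitutions + deletions + insertions) / N. A minimal sketch; it assumes a non-empty reference and skips the case and punctuation normalization that real benchmarks usually apply.

```python
def word_error_rate(reference, hypothesis):
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    # dp[i][j] = minimum edits to turn ref[:i] into hyp[:j]
    dp = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dp[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dp[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(dp[i - 1][j] + 1,         # deletion
                           dp[i][j - 1] + 1,         # insertion
                           dp[i - 1][j - 1] + cost)  # substitution or match
    return dp[len(ref)][len(hyp)] / len(ref)

print(round(word_error_rate("the cat sat on the mat", "the cat sat on mat"), 3))  # 0.167 (one deletion)
print(word_error_rate("turn left here", "turn left here"))                        # 0.0
```

Lower is better, and because insertions count too, WER can exceed 1.0 for a badly hallucinating transcript.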

The scale is unmatched. Netflix’s recommendation system personalizes suggestions for 260M+ subscribers based on their viewing habits.

No human can track that much behavior and make real-time suggestions.

And honestly, when I built a tiny recommender system for a uni project, seeing it work—even on 500 users—felt magical.

Imagine doing that millions of times per second.
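For a sense of what a tiny recommender does, here's user-based collaborative filtering: score each show you haven't watched by how similar (cosine similarity) its fans' ratings are to yours. The users, shows, and ratings are hypothetical, and this is not how Netflix's production system works; it only shows the core idea.

```python
import math

# Hypothetical ratings: user -> {show: rating from 1 to 5}
RATINGS = {
    "ana":  {"thriller_1": 5, "thriller_2": 4, "romcom_1": 1},
    "ben":  {"thriller_1": 4, "thriller_2": 5, "crime_doc": 5},
    "chen": {"romcom_1": 5, "romcom_2": 4, "thriller_1": 1},
}

def cosine(a, b):
    """Cosine similarity of two sparse rating vectors (missing ratings count as 0)."""
    dot = sum(a[item] * b[item] for item in set(a) & set(b))
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def recommend(user, ratings, top_n=1):
    """Rank unseen shows by similarity-weighted ratings from other users."""
    scores = {}
    for other, their in ratings.items():
        if other == user:
            continue
        sim = cosine(ratings[user], their)
        for item, rating in their.items():
            if item not in ratings[user]:
                scores[item] = scores.get(item, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)[:top_n]

print(recommend("ana", RATINGS))  # ['crime_doc']: ben's taste is closest to ana's
```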

But it’s not flawless.

While ML excels at specific, data-rich tasks, it fails badly outside its training.

One small change in input format, and your fancy model might choke.

In 2018, Reuters reported that Amazon had scrapped an AI hiring tool because it penalized women’s resumes. Why? It learned from biased historical hiring data. Garbage in, garbage out.
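One basic check that can catch this before launch is comparing selection rates across groups. The sketch below computes the adverse impact ratio (lowest group selection rate divided by the highest); under the "four-fifths rule" in US employment guidelines, a ratio below 0.8 is a red flag. The shortlist numbers are made up.

```python
def selection_rates(decisions):
    """decisions: list of (group, was_selected) pairs -> selection rate per group."""
    totals, selected = {}, {}
    for group, was_selected in decisions:
        totals[group] = totals.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}

def adverse_impact_ratio(decisions):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(decisions).values()
    return min(rates) / max(rates)  # assumes at least one group has a nonzero rate

# Hypothetical shortlist: the model advanced 30 of 100 men and 15 of 100 women
decisions = [("men", i < 30) for i in range(100)] + [("women", i < 15) for i in range(100)]
print(selection_rates(decisions))             # {'men': 0.3, 'women': 0.15}
print(adverse_impact_ratio(decisions) < 0.8)  # True: fails the four-fifths check
```

A check like this doesn't explain *why* the model is skewed, but it tells a human exactly where to start looking.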

As Gary Marcus, AI researcher and NYU professor, says: “AI is powerful, but it’s not intelligent in the human sense. It doesn’t understand.”

It’s a tool—fast, tireless, brilliant at crunching numbers—but without context, emotion, or true insight.

Bottom line? ML wins in speed, data processing, and repetitive tasks. But only when it’s pointed in the right direction—by humans.

Can Machine Learning Replicate Human Intelligence?

The short answer? Not yet—and maybe not anytime soon.

Machine learning, as powerful as it is, still falls short of true human intelligence.

ML excels at narrow tasks—like recognizing images, translating languages, or predicting patterns—but it lacks the broad, flexible understanding that humans naturally have.

Think about my uni project: the digit classifier hit 98% accuracy, yet it failed as soon as the handwriting got messy or unusual, something a human would catch instantly.

This gap shows how ML struggles with context and nuance, which are second nature to us.

Experts agree. Yoshua Bengio, a pioneer in deep learning, says, “Current AI systems lack the common sense and reasoning that humans develop from birth.”

Research from MIT highlights that ML systems need massive data just to learn one task, while a child can learn multiple complex tasks from very little exposure.

The big hurdle is moving from narrow AI (good at specific jobs) to Artificial General Intelligence (AGI)—AI that can think, learn, and adapt like a human.

Despite huge investments and breakthroughs, AGI remains a distant dream.

Companies like OpenAI and DeepMind are pushing boundaries, but even their latest models lack true understanding or consciousness.

I’ve seen this firsthand working on projects where ML models failed to handle unexpected inputs or ethical dilemmas.

Human intelligence thrives on intuition, emotion, and ethics, things ML can’t replicate.

And that’s a good thing—because blindly trusting AI without human judgment is risky.

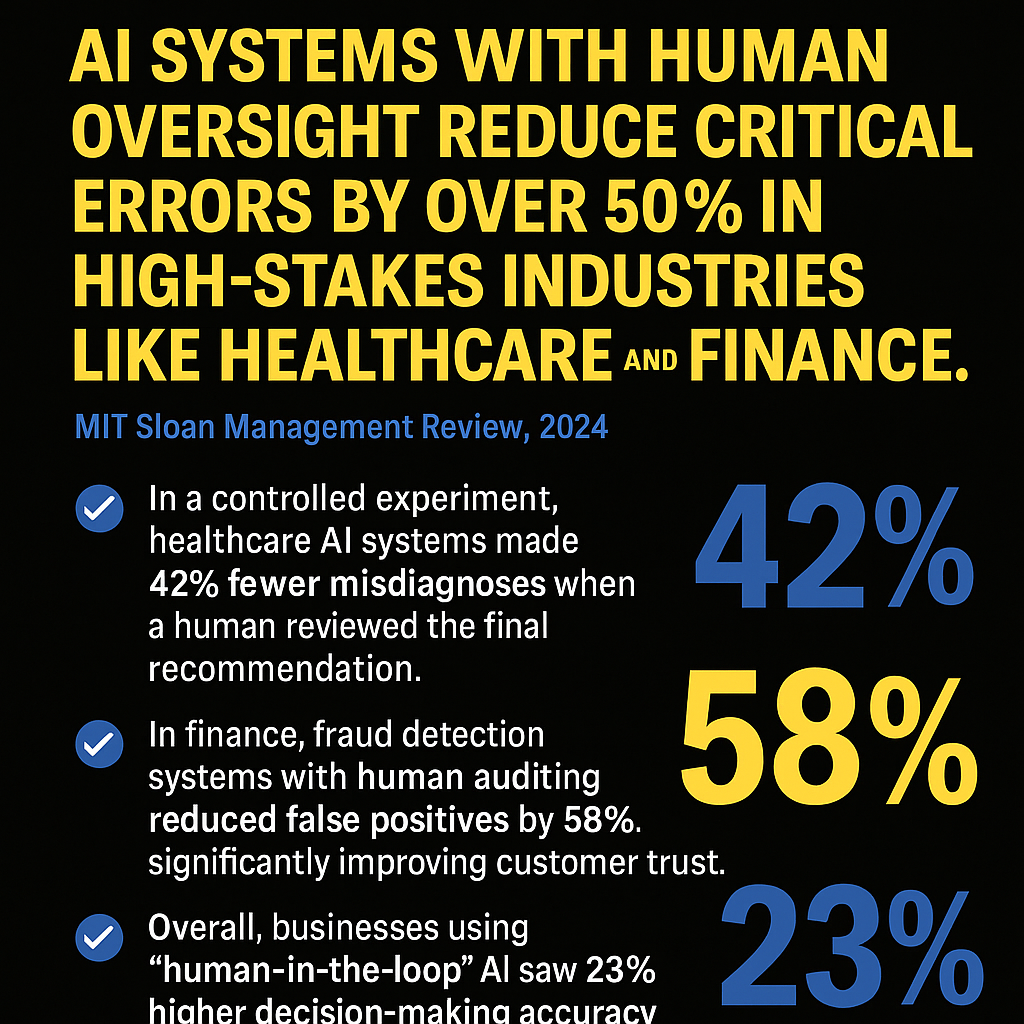

In fact, a 2023 Gartner report warns that over-reliance on AI without human oversight leads to 30% more errors in decision-making processes.

So while ML is a fantastic tool, it’s no substitute for human intelligence.

In short, machine learning can mimic some aspects of human intelligence but not replicate its depth or flexibility.

The journey to AGI is still filled with fundamental challenges—and until then, humans and ML will need to work side by side, each playing to their strengths.

Practical Implications for Businesses and Society

When it comes to machine learning vs human intelligence in the real world, the biggest question is: Where should you rely on ML, and where do humans still rule?

The truth is, neither alone is enough. They’re best when they work together.

In business, ML shines at handling massive data sets—think customer behavior analysis, fraud detection, or automating repetitive tasks.

For example, companies like Amazon use ML to personalize recommendations in real-time, boosting sales and customer satisfaction.

According to McKinsey, AI adoption has already increased productivity by 20% in some sectors (source: McKinsey AI Report 2023).

But ML struggles with nuance and ethics—it can’t understand cultural context or make judgment calls based on values.

I’ve seen this firsthand when a chatbot I worked on gave awkward, tone-deaf replies because it lacked emotional awareness.

Humans, on the other hand, excel in areas requiring empathy, ethics, and critical thinking.

Decision-making that involves moral dilemmas or ambiguous situations is where human intelligence is irreplaceable.

For instance, doctors still need to interpret ML-based diagnostic suggestions alongside patient history and emotions.

A recent study by MIT highlights how human oversight reduces AI errors by over 50% in healthcare (source: MIT Study 2024).

Over-relying on ML without human checks is risky.

Bias in training data, lack of transparency, and unintended consequences can lead to serious issues.

As AI expert Fei-Fei Li warns, “AI without human-centered design can amplify inequalities and errors.”

I remember a project where biased data caused the model to unfairly reject loan applicants—a sharp reminder that human review is critical.

So, for businesses and society, the smart move is hybrid intelligence—letting ML do the heavy lifting but keeping humans in the loop for judgment, ethics, and creativity.
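In practice, "keeping humans in the loop" often means routing: the model handles confident, low-stakes cases automatically and sends the rest to a person. A minimal sketch; the 0.9 confidence threshold and the rule that every rejection gets human review are assumptions you'd tune per use case, and the loan IDs are hypothetical.

```python
def route(predictions, threshold=0.9, always_review=("reject",)):
    """Split (item_id, label, confidence) tuples into auto-handled and human-review queues."""
    auto, review = [], []
    for item_id, label, confidence in predictions:
        # Low confidence or a high-stakes outcome -> a person decides
        if confidence < threshold or label in always_review:
            review.append(item_id)
        else:
            auto.append(item_id)
    return auto, review

# Hypothetical model outputs for loan applications
preds = [("loan-1", "approve", 0.97), ("loan-2", "approve", 0.62),
         ("loan-3", "reject", 0.99), ("loan-4", "approve", 0.91)]
auto, review = route(preds)
print(auto)    # ['loan-1', 'loan-4']
print(review)  # ['loan-2', 'loan-3']
```

Note that `loan-3` goes to a human despite 99% confidence: high confidence isn't the same as being right, especially when the cost of a wrong "no" falls on a real person.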

This balance maximizes efficiency while minimizing risks.

In short, machine learning complements human intelligence, but it doesn’t replace it.

Use ML where it excels, rely on humans where wisdom matters.

That’s the future I’m betting on. 🚀

Conclusion – Understanding the Balance

Machine learning and human intelligence are not rivals—they complement each other. ML excels at crunching massive data quickly and spotting patterns no human could catch, but it lacks creativity, emotion, and true understanding. As AI expert Andrew Ng said, “AI is the new electricity,” powering tools but not replacing the human spark. From my own experience building ML projects, I’ve seen firsthand how models can outperform me in speed but stumble on nuance and context. Research shows that over 60% of companies blend AI with human judgment to get the best results (Source: McKinsey 2023).

The reality is, ML struggles with common sense and ethical decisions—things humans handle naturally. Blindly trusting ML can lead to errors or biased outcomes, so human oversight is critical. The future isn’t ML versus humans—it’s ML with humans, working side by side to solve problems smarter and faster. This synergy is where real innovation happens.

In short, understanding the key differences helps us use each wisely. Don’t expect machines to think like us anytime soon, but leverage their strengths where they shine. The balance between human intelligence and machine learning is where the future of tech and business lies. 🌟