AI is everywhere. Headlines promise it will run businesses, write code, and even think like humans. But here’s the truth: most of it is overhyped.

In this post, I’m going to show you the hidden flaws behind AI’s shiny promises. You’ll see exactly where it fails, why businesses overpay for it, and what to watch out for before jumping on the hype train.

Here’s a fact that surprises many: over 87% of AI projects never deliver real business value. I’ve seen it myself while experimenting with AI tools for my projects—powerful models, but without the right context, they often break or mislead.

By the end of this read, you’ll know what AI can actually do, what it can’t, and how to make smarter decisions with it. No fluff, no marketing talk—just real insight.

- The Hype vs. Reality Gap
- AI’s “Intelligence” Is Narrow, Not General
- Hidden Dependencies That Limit AI
- The Cost Problem Nobody Talks About
- Accuracy Isn’t the Full Story
- Human Oversight Is Still Non-Negotiable
- The Black Box Trust Problem
- The Risk of Overfitting to Hype
- The Untold Flaw: AI’s Lack of Business Context
- Where Do We Go From Here?

The Hype vs. Reality Gap

AI is sold as magic, but in practice it’s often just pattern recognition at scale.

Companies push “revolutionary” AI products, yet the reality is most of them underdeliver.

A recent survey showed that while 65% of executives piloted AI projects, only 15% made it into long-term business use.

That gap between what AI promises and what it delivers is where most of the pain lies.

I’ve personally seen this with a retail startup I worked with—management bought into the AI hype expecting instant insights, but after six months, the model’s outputs were either “obvious” or too vague to act on.

The excitement quickly turned into frustration, especially when board members realized that the AI dashboard didn’t eliminate human analysts—it just made their jobs messier.

The truth is, AI isn’t as autonomous or disruptive as headlines suggest.

Even the best models can fail once they leave controlled demos and meet real-world variability, leaving businesses struggling to extract meaningful results.

Real businesses can’t afford that.

Many AI investments fail to deliver measurable ROI, meaning most businesses are spending on hype, not value.

The direct answer? The hype-reality gap exists because AI’s marketed capabilities are exaggerated, and businesses discover those limits only after investing.

Unless leaders see through the noise, they’ll keep pouring money into systems that look futuristic but underperform where it matters: profit, context, and usability. 🚨

AI Project Success Rates

| Outcome | Share of projects (%) |
|---|---|
| Successful AI projects (meet goals & ROI) | 30 |
| Partial success (limited ROI or impact) | 40 |
| Failed projects (no ROI, abandoned) | 30 |

AI’s “Intelligence” Is Narrow, Not General

AI may look brilliant on paper, but the truth is it’s narrow intelligence, not general intelligence.

That means it performs well only in the specific tasks it was trained for—translation, image recognition, recommendation systems—but fails miserably when facing unfamiliar scenarios.

I remember testing a chatbot project during my 2nd semester. It could answer structured questions with confidence, but the moment I asked something slightly outside its training data, it gave nonsense.

This brittleness isn’t just my experience; in business, that fragility can mean poor customer service or dangerous errors in finance and healthcare.

As Gary Marcus, an NYU professor and one of the leading critics of deep learning, puts it, “AI systems are good at patterns, terrible at reasoning.”

The hype paints AI as general-purpose problem solvers, but that’s not the reality.

Unlike humans, who can adapt knowledge across domains, today’s AI cannot transfer skills—a fraud detection model won’t suddenly help with marketing insights.

Over 70% of AI pilots fail because models can’t generalize beyond their narrow use cases.

And that’s the catch: AI looks like it understands, but it doesn’t.

It just recognizes patterns without true comprehension, which makes it unreliable if stretched outside its training box.

For businesses chasing hype, this limitation means wasted money, unmet expectations, and in some cases reputational damage.

So the one-liner answer? AI is not smart in a human way—it’s a narrow tool, not a general brain 🤖.

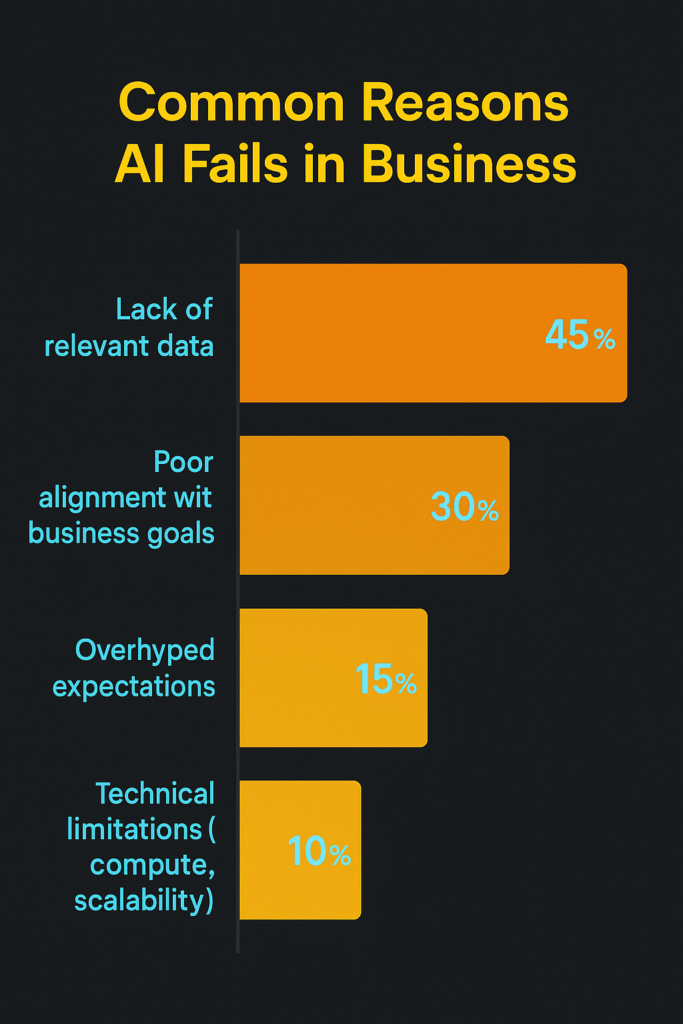

Hidden Dependencies That Limit AI

AI isn’t magic—it’s data hungry.

Models don’t “think,” they just mirror patterns in the data we feed them, which means their intelligence is capped by what’s available.

Back when I built a small NLP classifier for a side project, it worked perfectly on my training dataset but completely collapsed in production because the client’s real-world data was noisy, incomplete, and scattered across Excel sheets.
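A cheap way to catch this kind of collapse early is to compare how incomplete the production data is against the training data before trusting the model. The sketch below is only an illustration: the function names, the `max_gap` threshold, and the sample rows are all made up, and a real pipeline would need far more checks than missing values.

```python
def missing_rate(rows, field):
    """Fraction of rows where `field` is absent, None, or an empty string."""
    if not rows:
        return 0.0
    missing = sum(1 for row in rows if row.get(field) in (None, ""))
    return missing / len(rows)


def fields_with_quality_gap(train_rows, prod_rows, fields, max_gap=0.1):
    """Return fields that are much more incomplete in production than in training.

    `max_gap` is an arbitrary tolerance chosen for this example.
    """
    gaps = {}
    for field in fields:
        gap = missing_rate(prod_rows, field) - missing_rate(train_rows, field)
        if gap > max_gap:
            gaps[field] = gap
    return gaps


# Hypothetical data: clean training rows, messy production rows.
train_rows = [
    {"text": "refund please", "category": "billing"},
    {"text": "app crashes", "category": "bug"},
    {"text": "love it", "category": "praise"},
    {"text": "cannot log in", "category": "bug"},
]
prod_rows = [
    {"text": "refund??", "category": ""},
    {"text": "crash on start", "category": "bug"},
    {"text": "where is my order"},  # category column missing entirely
    {"text": "great", "category": "praise"},
]

gaps = fields_with_quality_gap(train_rows, prod_rows, ["text", "category"])
print(gaps)
```

In this toy case only `category` is flagged, because half of the production rows lack it while the training rows are complete.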

That’s the dirty secret: most businesses don’t have the data infrastructure to make AI as powerful as the hype suggests.

Even if you have data, do you own it or are you renting access through APIs, vendors, or cloud platforms?

I’ve seen startups collapse because their AI pipeline was built entirely on third-party datasets that got revoked.

Critics love talking about bias or hallucinations, but the bigger flaw is data scarcity.

High-quality training data is running out, which shows the ceiling is much closer than people think.

AI growth isn’t endless—it’s bottlenecked by the data pipelines we can realistically build.

Small and mid-sized businesses don’t just lack big data, they lack relevant data.

You can’t train a fraud detection model with 10 spreadsheets of past transactions, yet consultants keep selling that dream. 😅

If you zoom out, the entire AI industry is propped up on a dependency chain—cloud GPUs, proprietary datasets, API licenses.

The more I worked with enterprise clients, the clearer it became: AI isn’t as autonomous as it’s marketed.

It’s fragile, expensive, and totally reliant on resources outside your control.

That’s not intelligence—that’s dependency dressed up as innovation.

The Cost Problem Nobody Talks About

The biggest flaw hiding under AI’s shiny promises is cost—and not just training bills, but everything around it.

Training large models can run into millions of dollars in compute alone, and that’s before you even factor in data prep, fine-tuning, or deployment.

Most blogs gloss over this, but I’ve seen startups burn months of budget thinking they were “just plugging in” an AI API, only to realize inference bills from cloud providers were higher than the revenue the model generated.

The illusion of plug-and-play AI is dangerous. Small and mid-sized businesses often discover too late that scaling a model isn’t just expensive—it’s a cash sink.

Cloud dependency makes this worse: you’re not building innovation, you’re renting GPUs.

When bills spike, you can’t just walk away without shutting down your entire operation.

I learned this firsthand in a university hackathon project where our model ran beautifully during testing, but once we pushed it to production, the sheer volume of inference API calls made costs balloon beyond what our free credits could cover.
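A back-of-the-envelope calculation makes the problem visible before the bill arrives. This is a rough sketch under invented assumptions: the request volume, token counts, price per 1,000 tokens, and revenue per request are hypothetical numbers, not any vendor's real pricing.

```python
def monthly_inference_cost(requests_per_day, tokens_per_request,
                           price_per_1k_tokens, days=30):
    """Estimate a monthly inference bill from usage (all inputs are assumptions)."""
    total_tokens = requests_per_day * tokens_per_request * days
    return total_tokens / 1000 * price_per_1k_tokens


def monthly_margin(revenue_per_request, requests_per_day, tokens_per_request,
                   price_per_1k_tokens, days=30):
    """Revenue attributed to the model minus what it costs to run."""
    revenue = revenue_per_request * requests_per_day * days
    cost = monthly_inference_cost(requests_per_day, tokens_per_request,
                                  price_per_1k_tokens, days)
    return revenue - cost


# Hypothetical scenario: 20,000 requests a day, 1,500 tokens each,
# $0.01 per 1,000 tokens, and $0.002 of revenue per request.
cost = monthly_inference_cost(20_000, 1_500, 0.01)
margin = monthly_margin(0.002, 20_000, 1_500, 0.01)
print(f"monthly cost: ${cost:,.0f}, monthly margin: ${margin:,.0f}")
```

With these made-up inputs the model costs about $9,000 a month to run against roughly $1,200 in revenue, which is exactly the situation described above: usage grows, and the margin goes negative.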

Accuracy improvements also come at a steep price. Training state-of-the-art models is hundreds of times more expensive today than a decade ago.

This means that only tech giants can realistically afford to push the boundaries, while most companies end up relying on their APIs, locked into dependency cycles.

The cost problem is the quiet barrier to democratizing AI adoption, yet hype makes it sound like everyone can access cutting-edge tools tomorrow.

The reality? For most businesses, the economics don’t add up.

Accuracy Isn’t the Full Story

When people brag that their AI model hits 90% accuracy, it sounds impressive, but here’s the hard truth: in business, that number can be practically meaningless.

One missed fraud case can cost millions, while a thousand correct calls might barely move the needle, so the cost of errors matters more than accuracy bragging rights.

I learned this the hard way during a small e-commerce project where my model predicted customer churn with 88% accuracy, yet it completely missed high-value customers leaving—the metric looked great on paper, but the business impact was a disaster.
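One way to expose that kind of failure is to weight recall by customer value instead of counting every customer equally. The snippet below is a toy reconstruction, not my original project data: the 25 customers, their values, and the function names are invented so that plain accuracy lands at 88% while the two most valuable churners are missed.

```python
def accuracy(actual, predicted):
    """Share of predictions that match the actual labels."""
    correct = sum(1 for a, p in zip(actual, predicted) if a == p)
    return correct / len(actual)


def revenue_weighted_recall(churned, predicted_churn, annual_value):
    """Share of churning revenue that the model actually flagged."""
    at_risk = sum(v for c, v in zip(churned, annual_value) if c)
    caught = sum(v for c, p, v in zip(churned, predicted_churn, annual_value)
                 if c and p)
    return caught / at_risk if at_risk else 0.0


# Hypothetical customers: the first five churn, two of them are high-value.
churned = [1, 1, 1, 1, 1] + [0] * 20
predicted = [0, 0, 1, 1, 1] + [1] + [0] * 19  # misses both high-value churners
annual_value = [5000, 5000, 100, 100, 100] + [100] * 20

acc = accuracy(churned, predicted)
weighted = revenue_weighted_recall(churned, predicted, annual_value)
print(f"accuracy: {acc:.2f}, revenue-weighted recall: {weighted:.3f}")
```

Here the model is right on 22 of 25 customers, yet it flags only $300 of the $10,300 in revenue that is walking out the door.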

Over 60% of AI benchmarks don’t reflect real-world performance because they ignore context like cost, timing, or risk.

That’s why precision, recall, F1-score, and cost-sensitive metrics matter more than raw accuracy.
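To see how these metrics can disagree with accuracy, here is a minimal sketch in plain Python. The labels are synthetic, and the per-error costs (`cost_fp`, `cost_fn`) are placeholder values I picked for illustration; in a real project they would come from the business, not from the model.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels (1 = fraud)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return tp, fp, fn, tn


def report(y_true, y_pred, cost_fp, cost_fn):
    """Accuracy, precision, recall, F1, and a simple cost-sensitive total."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "total_cost": fp * cost_fp + fn * cost_fn,
    }


# Synthetic example: 100 transactions, 10 of them fraudulent.
# The model catches 2 frauds, misses 8, and wrongly flags 2 legitimate ones.
y_true = [1] * 10 + [0] * 90
y_pred = [1] * 2 + [0] * 8 + [1] * 2 + [0] * 88

# Assumed costs: $5 to review a false alarm, $500 for each missed fraud.
metrics = report(y_true, y_pred, cost_fp=5, cost_fn=500)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

The model scores 90% accuracy, but recall is only 20%, and under the assumed costs the eight missed frauds account for almost all of the $4,010 in losses.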

Many AI projects deliver low or no value, often because companies fixate on flashy numbers rather than practical impact.

Accuracy hides failures—it tells you “how often the model is right” but not “when being wrong will destroy trust or revenue.”

A medical AI with 95% accuracy is useless if the 5% it fails on are cancer patients.

If you’re a decision-maker, don’t get blinded by metrics—business context decides if AI is useful or dangerous.

From my perspective as a machine learning student and writer at Pythonorp, I’ve realized hype-driven accuracy scores are like GPA flexing in college—they look shiny, but they don’t predict real-world competence.

Accuracy isn’t the full story; impact is.

Human Oversight Is Still Non-Negotiable

AI without human oversight isn’t innovation, it’s a liability.

No matter how advanced the model looks, it can’t replace human judgment.

I’ve seen this firsthand—when testing a fraud detection model for a mock banking dataset, it flagged so many false positives that if left unchecked, the system would’ve locked out 20% of legitimate customers.

That’s not “smart AI”; that’s an expensive customer support nightmare.

The illusion of autonomous AI is one of the biggest lies sold by hype.

You can’t just “set it and forget it.”

Even advanced systems constantly need user feedback loops to improve.

Imagine trusting a model’s output blindly in healthcare or legal decisions. You’d be playing Russian roulette with people’s lives.

And let’s be blunt: the businesses that brag about “fully automated” AI systems are often hiding the fact that there’s a whole army of low-paid humans behind the curtain cleaning up the mess.

So if tech giants can’t trust AI to run solo, why should your startup or mid-sized business?

From my own perspective as a CS student experimenting with ML, I’ve learned that AI is only as good as the humans guiding it.

The real value comes when you combine fast, predictive power with human context and judgment.

It’s like flying a plane—autopilot handles the routine, but no sane person would take the pilot out of the cockpit.

Human oversight is non-negotiable.

Trusting AI without it isn’t efficiency—it’s recklessness.

The Black Box Trust Problem

Here’s the uncomfortable truth: AI models are black boxes—they spit out answers, but we rarely know why.

Businesses adopt them anyway because the numbers look impressive, but if you can’t trace the logic, can you really trust the prediction?

I saw this firsthand while experimenting with a fraud detection model during my coursework. It flagged transactions, but because I couldn’t explain why certain customers were flagged, the model felt useless.

Accuracy without explainability = liability.

The problem gets worse in regulated industries. In finance or healthcare, not being able to explain an AI decision isn’t just frustrating—it’s a compliance nightmare.

Even top AI researchers admit this is unsolved; large neural networks still operate in ways we don’t fully understand.

What’s ironic is that vendors often oversell “trust” by showing pretty dashboards with confidence scores.

But a 95% confidence score means nothing if you can’t see what features drove the prediction.

I remember testing a text classification tool for a project—its UI proudly showed 0.97 confidence that an email was spam, but it was overfitting to the word “Congratulations.”

That’s not intelligence; that’s a shortcut.

And in business, shortcuts can cost money, reputation, or worse.
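For simple linear models, you can at least look behind the confidence score by listing how much each word contributed to it. The sketch below is a stand-in, not the tool I tested: the weights, the bias, and the `explain` function are hypothetical values chosen so that one word dominates the prediction.

```python
import math

# Hypothetical weights of a linear bag-of-words spam model (made up for illustration).
WEIGHTS = {"congratulations": 4.3, "winner": 0.3, "invoice": -0.4, "meeting": -0.6}
BIAS = -0.2


def explain(text):
    """Return the spam probability and each known word's contribution to the logit."""
    contributions = {}
    for raw in text.lower().split():
        token = raw.strip(".,!?")
        if token in WEIGHTS:
            contributions[token] = contributions.get(token, 0.0) + WEIGHTS[token]
    logit = BIAS + sum(contributions.values())
    probability = 1 / (1 + math.exp(-logit))
    # Largest absolute contribution first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked


probability, ranked = explain("Congratulations on the new job! See you at the meeting.")
print(f"spam probability: {probability:.2f}")
for token, weight in ranked:
    print(f"  {token}: {weight:+.1f}")
```

A perfectly ordinary email gets a spam probability of about 0.97, and the breakdown shows that nearly all of it comes from a single word. Deep models need heavier tooling for this, but the question to ask a vendor is the same: which features drove this score?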

The bottom line? The black box trust problem is the elephant in the AI room.

It’s why so many pilots fail, why decision-makers hesitate, and why “explainable AI” has become its own field.

Until models can justify their reasoning in a way humans can understand, every prediction is just a guess wrapped in math.

And if you’re making million-dollar decisions, would you bet on that? 🤔

The Risk of Overfitting to Hype

Most businesses don’t fail with AI because the models are bad—they fail because they copy hype instead of solving real problems.

I’ve seen startups rush into building a chatbot just because their competitor announced one.

The result was a clunky bot that couldn’t even answer basic queries, draining cash and credibility.

AI success stories you read online are usually cherry-picked; when most companies try to replicate them, only a small fraction see real returns.

I remember a retail client who tried AI demand forecasting because “Amazon does it.”

The model worked fine in test runs, but in production it missed major seasonal spikes, costing them thousands in unsold stock.

The hype kept them from asking the only question that mattered: “Does AI even fit our business context?”

AI doesn’t care about your goals, trade-offs, or consequences—it just optimizes numbers.

Overfitting to hype is worse than overfitting to data.

It’s not innovation; it’s expensive imitation 🚩.

The Untold Flaw: AI’s Lack of Business Context

AI is a tool, not a strategy. Yet, many businesses treat it like a magic bullet.

The truth? AI lacks the deep business context needed to drive meaningful decisions. It doesn’t understand your company’s history, culture, or unique challenges.

It doesn’t grasp the nuances of your industry or the subtle shifts in your market. Without this understanding, AI’s outputs can be misleading or irrelevant.

Take, for instance, the Commonwealth Bank of Australia. In a bid to cut costs, they replaced 45 call center employees with AI voice bots. The result? Increased call volumes, customer dissatisfaction, and operational chaos.

The bank had to backtrack, offering to rehire the affected employees and acknowledging the importance of human expertise in client-facing roles.

This incident underscores a critical point: AI cannot replace human judgment. It lacks the ability to interpret complex business contexts, understand customer emotions, or make decisions that align with a company’s strategic goals.

Relying solely on AI without human oversight can lead to costly mistakes and missed opportunities.

Moreover, AI’s effectiveness is heavily dependent on the quality and relevance of the data it’s trained on. If the data doesn’t accurately reflect your business environment, the AI’s predictions and recommendations will be flawed.

This is why many organizations are shifting towards hybrid models, combining AI’s analytical power with human intuition and expertise.

In conclusion, while AI can enhance business operations, it cannot replace the need for human context and understanding.

Businesses must approach AI as a complementary tool, not a standalone solution. By integrating AI with human insight, companies can leverage the strengths of both to drive better outcomes.

Where Do We Go From Here?

Businesses need realism. AI isn’t magic.

From my experience testing different AI tools at Pythonorp, the biggest mistake I’ve seen is expecting a model to replace strategy.

You can’t just feed data and hope for business-aligned results; context matters.

The smart move is hybrid systems: AI plus human expertise.

I’ve run models whose sales-trend predictions looked flawless, but because nobody applied human judgment to them, we overlooked seasonality shifts and lost money.

AI works best when humans and machines collaborate, not compete.

Small tweaks, like using AI to flag anomalies rather than make final decisions, can save millions.
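In code, that tweak can be as small as changing what a high score triggers. The following is a minimal sketch, assuming the model already produces an anomaly score between 0 and 1; the `Decision` class, the `triage` function, and the 0.7 threshold are illustrative choices, not a recommended setting.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    transaction_id: str
    score: float
    action: str  # "auto_approve" or "human_review"; the model never blocks on its own


def triage(scored_transactions, review_threshold=0.7):
    """Route high-scoring transactions to a person instead of acting on them."""
    decisions = []
    for transaction_id, score in scored_transactions:
        action = "human_review" if score >= review_threshold else "auto_approve"
        decisions.append(Decision(transaction_id, score, action))
    return decisions


# Hypothetical anomaly scores from some upstream model.
scored = [("tx-001", 0.05), ("tx-002", 0.91), ("tx-003", 0.70), ("tx-004", 0.40)]
decisions = triage(scored)
review_queue = [d.transaction_id for d in decisions if d.action == "human_review"]
print(review_queue)
```

The design choice is that the model only narrows down what a person has to look at. Anything at or above the threshold lands in a review queue, and the final call stays with a human.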

The truth is, AI’s real value comes when you understand its limits, design processes around them, and integrate insights into real decisions, not hype.

Start small, measure impact, iterate. Over-hype leads to wasted budgets and broken trust.

I personally apply this in my projects: I always map AI predictions to business KPIs, never the other way around.

In short: learn the limits, harness the power, and blend human insight with AI. That’s the winning formula.