Machine learning is moving fast—too fast to ignore. If you’re a developer, entrepreneur, or business leader, chances are you’re either already using ML or trying to figure out how it can actually help you.

And with 2025 underway, you’re probably wondering: What’s next? What should I be paying attention to?

This post is here to answer that. It’s not just a list of buzzwords—it’s a focused look at real trends shaping machine learning this year.

From practical shifts in infrastructure and model design to new applications that could impact your industry, we’ll break down what matters and why. Whether you’re building with ML or planning around it, this guide is designed to help you spot what’s coming and make smarter decisions.

Let’s get into it.

Foundation-Level Shifts

AI is getting leaner, faster, and a lot more open.

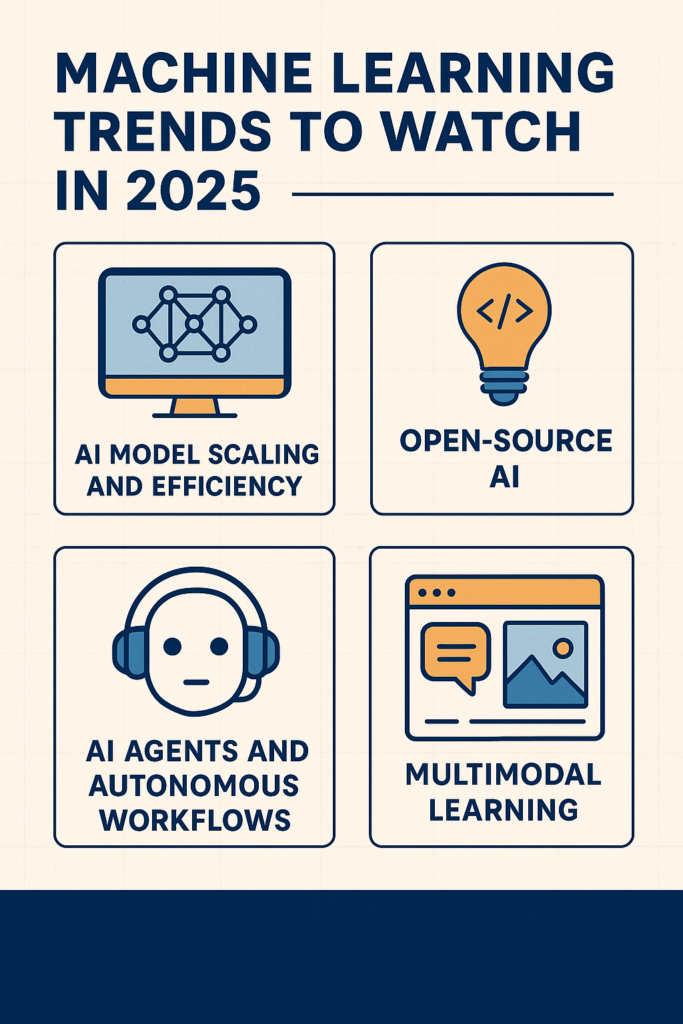

The biggest ML trend of 2025? Doing more with less.

Large models like GPT-4 or Claude 3 are great, but they’re expensive, power-hungry, and painfully slow for real-world deployment.

That’s why efficient fine-tuning is exploding: parameter-efficient fine-tuning (PEFT) methods like LoRA and QLoRA are turning huge models into task-specific beasts without needing thousands of GPUs.

I actually fine-tuned a model with QLoRA on my M2 MacBook last month.

It wasn’t lightning fast, but it worked—and for someone not backed by a startup budget, that’s huge.

According to a 2024 Hugging Face report, QLoRA reduces GPU memory usage by 70%+ without major accuracy drops (source).

That means indie devs, students, and tiny teams can fine-tune models that previously required Google-scale resources.
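To put a number on "doing more with less," here's a rough sketch of the arithmetic behind LoRA. The idea is to freeze the original weight matrix W and train only two small matrices, B and A, whose product gets added to it. The layer size (4096 x 4096) and the rank (8) are assumptions I picked for illustration, not figures from any particular model.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Weights in the full matrix W (d_out x d_in), all trained in full fine-tuning."""
    return d_out * d_in


def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Weights in the two low-rank factors: B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


# Hypothetical layer size and rank, chosen only for illustration.
d_out, d_in, rank = 4096, 4096, 8
full = full_params(d_out, d_in)
lora = lora_trainable_params(d_out, d_in, rank)

print(f"full fine-tuning: {full:,} trainable weights")
print(f"LoRA (rank {rank}): {lora:,} trainable weights")
print(f"fraction trained: {lora / full:.4%}")
```

QLoRA uses the same low-rank trick but keeps the frozen base model in 4-bit precision, which is where much of the extra memory saving comes from.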

Now combine that with Edge AI, and you’ll see why everyone’s hyped.

Instead of sending your data to some server halfway across the world, models now run on-device: on your laptop, your phone, or even your fridge (Samsung literally demoed it 🤯).

It’s faster, cheaper, and far more private.

But let’s not pretend it’s perfect.

I’ve used local LLMs like TinyLlama or Phi-2, and yeah, they’re cute—but not Claude 3 smart.

Quantized models often forget instructions or get repetitive fast.

Still, they’re improving crazy fast—and being able to run a chatbot without an internet connection is mind-blowing.
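If you're wondering why quantized models lose some sharpness, here's a toy sketch of what quantization does to weights. It uses simple symmetric int8 rounding over a whole list, which is an assumption for illustration. Real local-model formats typically use more elaborate block-wise schemes at lower bit widths, and the weights below are made up.

```python
def quantize_int8(values):
    """Symmetric quantization: map floats to integers in [-127, 127] with one shared scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Recover approximate floats from the stored integers and the scale."""
    return [x * scale for x in q]


weights = [0.82, -0.31, 0.05, -1.27, 0.004]  # made-up weights for illustration
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("stored integers:", q)
print(f"largest round-trip error: {max_error:.5f}")
```

Notice that the smallest weight rounds to zero. Each individual error is tiny, but a model has billions of weights, and fewer bits means coarser rounding.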

Now let’s talk about the elephant in the server room: open-source vs closed-source.

While OpenAI, Anthropic, and Google hoard their best models like dragons, the open-source world is fighting back—and winning some battles.

In 2024, models like Mistral, Llama 3, and Dolphin outperformed GPT-3.5 in benchmarks and real-world tasks (source).

I remember the first time I downloaded Mistral and ran it locally—it felt illegal 😂.

And it worked almost like GPT, but offline and free.

Open-source models aren’t just catching up—they’re dominating in niche domains.

Want a math tutor LLM? A dev assistant? A creative storybot? Open models are being fine-tuned for these jobs daily.

Tools like Ollama and LM Studio, together with quantized models in the GGUF format, make it super simple to experiment.

I’ve built more prototypes with open models in 3 months than I ever did using GPT APIs.

And not having to worry about API limits or monthly bills? Huge win.

Of course, there’s a trade-off.

For complex stuff—legal AI, medical advice, nuanced reasoning—I still default to GPT-4 or Claude 3.

Because when accuracy really matters, closed models still win (for now).

So here’s the ultra-short takeaway for 2025:

👉 ML is going lightweight.

👉 Open-source is rising.

👉 Edge AI is real.

👉 And anyone can fine-tune.

This isn’t just a tech upgrade.

It’s a mindset shift—from “Who has the biggest model?” to “Who has the smartest, most efficient one?”

And personally, as someone still learning, building, and bootstrapping, I think there’s never been a better time to jump in 🚀.

Application-Level Machine Learning Trends in 2025

Want to know where machine learning is actually being used in 2025? This is where things get spicy.

It’s not just research papers and models anymore—real-world ML is building, diagnosing, driving, selling, and even writing for us. And it’s only getting weirder and better.

AI Agents and Autonomous Workflows

Forget chatbots that say “How can I help you today?” like a broken record.

In 2025, we’ve got AI agents—task-driven, multi-step, and often multi-agent systems that plan, reason, and act with minimal human input.

Think of it like ChatGPT, but instead of just chatting, it books your flights, writes your reports, and debugs your code—in one smooth flow.

I once built a research assistant using AutoGen, and it could search the web, summarize PDFs, and draft blog outlines like this one, all on its own. 💻

According to Microsoft Research, agent-based systems can cut human involvement by 50% in complex workflows.

But they’re far from perfect.

They still hallucinate facts, get stuck in logic loops, and sometimes the entire system crashes because one agent misinterprets a task. 😬
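Here's a stripped-down sketch of the plan-act loop that most agent setups boil down to, with two guardrails for the failure modes above: a step cap against logic loops and a check for tool names the planner made up. Everything in it is hypothetical. The `search` and `summarize` tools are stubs, and `plan_next_step` is a hard-coded stand-in for the LLM call a real agent would make. It is not how AutoGen works internally.

```python
from typing import Callable, Dict, List, Tuple


# Hypothetical tools; real agents would call search APIs, PDF parsers, and so on.
def search(query: str) -> str:
    return f"results for '{query}'"


def summarize(text: str) -> str:
    return f"summary of [{text}]"


TOOLS: Dict[str, Callable[[str], str]] = {"search": search, "summarize": summarize}


def plan_next_step(goal: str, history: List[Tuple[str, str]]) -> Tuple[str, str]:
    """Stand-in for an LLM planner: returns (tool_name, tool_input) or ("done", answer)."""
    if not history:
        return "search", goal
    if len(history) == 1:
        return "summarize", history[-1][1]
    return "done", history[-1][1]


def run_agent(goal: str, max_steps: int = 5) -> str:
    history: List[Tuple[str, str]] = []
    for _ in range(max_steps):  # hard cap so a confused planner cannot loop forever
        action, payload = plan_next_step(goal, history)
        if action == "done":
            return payload
        if action not in TOOLS:  # reject tool names the planner made up
            history.append((action, f"error: unknown tool '{action}'"))
            continue
        history.append((action, TOOLS[action](payload)))
    return "stopped: step limit reached"


print(run_agent("ML trends 2025"))
```

The cap and the tool check are the boring parts, but they're what keeps one misread task from taking the whole run down.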

Vertical-Specific Machine Learning

Industry-specific ML is booming.

Generic models are being ditched for custom-trained solutions that know their domain inside-out.

In healthcare, ML is diagnosing diseases, assisting in surgery, and generating clinical notes.

One of my friends is training a small LLM to transcribe doctor-patient conversations more accurately than GPT-4. And it works—because it knows the medical lingo.

In finance, machine learning is flagging fraud, predicting credit defaults, and even analyzing geopolitical events for investment decisions.

Accenture reports that 60% of financial institutions are doubling down on domain-specific ML in 2025 (source).

But it’s not all roses.

I once tried training a logistics forecasting model—turns out, cleaning the data and mapping the domain logic took longer than the actual training.

Without industry access, you’re pretty much guessing.

Multimodal Learning

This one blew my mind.

Multimodal models can understand text, images, audio, and video—all at once.

OpenAI’s GPT-4o is a beast here. You show it a graph, ask a question with your voice, and it replies naturally—in speech.

I tested it during a dashboard UX project. Asked it to “explain the dip in Q3,” and it broke it down better than the actual intern assigned to that task. 😂

OpenAI claims it outperforms Gemini 1.5 and Claude 3 on key vision and speech benchmarks.

But here’s my gripe—it can be too slow in real-time interactions, and sometimes it overexplains, turning a one-line answer into a lecture.

Still, this tech is gold for accessibility, customer support, and interactive learning tools.

Expect to see it embedded in smart assistants, AR/VR platforms, and maybe even robots soon.

New Frontiers in ML Research

If you’re asking, “What’s really next in machine learning research for 2025?”, here’s the scoop: the focus is shifting beyond just bigger models and more data.

It’s about smarter reasoning, deeper understanding, and continuous learning.

Let me walk you through some cutting-edge ideas that feel like sci-fi but are becoming very real—and why they matter.

Neurosymbolic AI: The Best of Both Worlds

You’ve probably heard that deep learning models are great at pattern recognition but struggle with abstract reasoning or logic.

That’s where neurosymbolic AI steps in.

It’s like combining a super-smart brain (neural networks) with a sharp logic engine (symbolic AI).

Think of it as a way to give AI common sense and reasoning abilities it traditionally lacked.

I first got interested in this when reading about IBM’s Project Debater—an AI that can argue complex topics by combining facts and logic.

This fusion approach lets models handle tricky tasks like legal reasoning or scientific discovery.

But here’s the catch: integrating these two paradigms is still a research puzzle.

Many systems struggle with balancing speed and explainability.

Some researchers warn that neurosymbolic methods can get too complex and end up slower than pure neural nets.

Still, the promise is huge.

According to a 2023 MIT study, neurosymbolic AI could improve decision-making accuracy by up to 30% in medical diagnosis tasks (source: MIT CSAIL).

So, if you’re interested in AI that thinks more like humans, keep an eye on this space.

Causal Machine Learning: From “What” to “Why”

Most machine learning models excel at predicting outcomes—they can tell you what will happen based on data patterns.

But they fall short at answering why something happens.

Enter causal machine learning, which aims to uncover cause-effect relationships instead of mere correlations.

I remember diving into this when I helped a small startup improve their marketing campaigns.

Using causal inference, we could identify which ads actually drove sales—not just which ads got clicks.

It felt like moving from blurry snapshots to crystal-clear insights.

Researchers at Google’s CausalML team have developed tools that make causal analysis scalable across huge datasets, improving reliability in fields from healthcare to finance (Google AI Blog).

The downside is that causal ML often needs carefully designed experiments or assumptions that are hard to validate in real life.

So while it’s powerful, it’s not magic.
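To show the clicks-versus-sales difference, here's a minimal sketch of the simplest causal estimate: the difference in purchase rates between users randomly assigned to see an ad and users who weren't. The records are made up, and the sketch assumes a properly randomized test. With observational data, you'd have to adjust for confounders before trusting a number like this.

```python
from statistics import mean

# Made-up records from a hypothetical randomized test: each user was assigned
# by coin flip to see the ad (1) or not (0), and we logged clicks and purchases.
users = [
    {"saw_ad": 1, "clicked": 1, "bought": 1},
    {"saw_ad": 1, "clicked": 1, "bought": 1},
    {"saw_ad": 1, "clicked": 0, "bought": 0},
    {"saw_ad": 1, "clicked": 0, "bought": 1},
    {"saw_ad": 0, "clicked": 0, "bought": 1},
    {"saw_ad": 0, "clicked": 0, "bought": 0},
    {"saw_ad": 0, "clicked": 0, "bought": 0},
    {"saw_ad": 0, "clicked": 0, "bought": 1},
]


def average_treatment_effect(records, treatment_key, outcome_key):
    """Difference in mean outcome between the treated and control groups."""
    treated = [r[outcome_key] for r in records if r[treatment_key] == 1]
    control = [r[outcome_key] for r in records if r[treatment_key] == 0]
    return mean(treated) - mean(control)


click_rate = mean(r["clicked"] for r in users if r["saw_ad"] == 1)
ate = average_treatment_effect(users, "saw_ad", "bought")

print(f"click rate among users who saw the ad: {click_rate:.2f}")
print(f"estimated lift in purchase rate from the ad: {ate:.2f}")
```

The click rate tells you who engaged. The lift tells you how many extra sales happened because of the ad, which is the number the budget should follow.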

Continual and Lifelong Learning: Models That Never Stop Growing

One of my biggest frustrations with traditional ML models is that they’re like students who forget everything after the exam.

Once trained, they don’t learn new things unless you retrain them from scratch.

But in dynamic environments—think cybersecurity or stock markets—you need models that adapt on the fly.

That’s the goal of continual learning (aka lifelong learning).

Imagine your AI assistant improving every day without losing past knowledge or needing full retraining cycles.

Companies like DeepMind and OpenAI are investing heavily here; DeepMind’s recent paper on “Efficient Lifelong Learning” showed models maintaining over 90% performance after learning new tasks sequentially (DeepMind Research).

From my side, trying to keep up with evolving datasets during a freelance project, I can say continual learning would’ve saved me weeks of repetitive work.

The challenge? Avoiding “catastrophic forgetting,” where new info wipes out old knowledge.

Current solutions work well but aren’t plug-and-play yet.
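One common way to fight catastrophic forgetting is rehearsal: keep a small memory of old examples and mix some of them into every new training batch. Here's a minimal sketch of that idea using reservoir sampling to fill the memory. It's my own illustration, not the method from the DeepMind paper, and the class name, task labels, and sizes are all made up.

```python
import random


class ReplayBuffer:
    """Fixed-size memory of past examples, filled by reservoir sampling."""

    def __init__(self, capacity: int, seed: int = 0):
        self.capacity = capacity
        self.items = []
        self.seen = 0
        self.rng = random.Random(seed)

    def add(self, example) -> None:
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(example)
        else:
            # Every example seen so far stays in memory with equal probability.
            slot = self.rng.randrange(self.seen)
            if slot < self.capacity:
                self.items[slot] = example

    def sample(self, k: int):
        return self.rng.sample(self.items, min(k, len(self.items)))


def mixed_batch(new_examples, buffer: ReplayBuffer, replay_size: int):
    """Combine fresh data with replayed old data, then remember the fresh data."""
    batch = list(new_examples) + buffer.sample(replay_size)
    for example in new_examples:
        buffer.add(example)
    return batch


buffer = ReplayBuffer(capacity=4)
task_a = [("task_a", i) for i in range(10)]
task_b = [("task_b", i) for i in range(3)]

mixed_batch(task_a, buffer, replay_size=2)          # first task: nothing to replay yet
batch = mixed_batch(task_b, buffer, replay_size=2)  # second task: replays task_a examples
print(batch)
```

In a real setup, each mixed batch would feed a training step, so the model keeps seeing a little of the old task while it learns the new one.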

To sum up, the frontiers of ML research in 2025 are moving toward smarter reasoning (neurosymbolic AI), deeper understanding (causal ML), and lifelong adaptability (continual learning).

These aren’t just buzzwords—they represent the next wave of AI that could finally solve some of the toughest problems holding machine learning back.

If you’re building or investing in AI today, watching these trends closely isn’t optional—it’s essential. 🚀

ML Infrastructure and Tooling

Wondering what powers machine learning behind the scenes in 2025? Simply put, infrastructure and tooling are becoming smarter, faster, and more user-friendly—because great models need great support systems.

AI DevOps & MLOps 2.0

Deploying ML models used to feel like juggling flaming swords—fragile, complex, and prone to failure.

But now, MLOps (machine learning operations) has leveled up.

Tools like MLflow, Weights & Biases, BentoML, and Ray Serve are making it easier to track experiments, deploy models at scale, and monitor their performance in real-time.

For example, during one of my freelance gigs, using Weights & Biases cut debugging time by more than half—because I could see exactly which model version caused a dip in accuracy.
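The core idea is simple enough to sketch without any tracking library: log every run with its settings and metrics, then scan for the version where a metric dropped. The run log below is made up, and `find_regressions` is a hypothetical helper, not part of MLflow or Weights & Biases. Those tools store and visualize records like these for you.

```python
# Hypothetical run log; names and numbers are made up for illustration.
runs = [
    {"version": "v1", "learning_rate": 3e-4, "val_accuracy": 0.912},
    {"version": "v2", "learning_rate": 3e-4, "val_accuracy": 0.915},
    {"version": "v3", "learning_rate": 1e-3, "val_accuracy": 0.871},
    {"version": "v4", "learning_rate": 1e-3, "val_accuracy": 0.874},
]


def find_regressions(runs, metric, tolerance=0.01):
    """Return (previous, current) version pairs where the metric fell by more than tolerance."""
    regressions = []
    for prev, curr in zip(runs, runs[1:]):
        if prev[metric] - curr[metric] > tolerance:
            regressions.append((prev["version"], curr["version"]))
    return regressions


print(find_regressions(runs, "val_accuracy"))
```

Here the scan points at the jump from v2 to v3, which lines up with the learning rate change logged in the same records.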

There’s also a growing focus on governance and cost-efficiency, especially as cloud expenses skyrocket with larger models.

However, MLOps isn’t perfect yet. Many teams still struggle with standardization across different environments, and integrating these tools can feel overwhelming for smaller startups.

Synthetic Data Generation

Another game changer? Synthetic data.

When real data is scarce, sensitive, or biased, synthetic data generated by models (like GANs or diffusion models) can fill the gaps.

In fact, a 2024 report from Gartner predicts synthetic data will be used in 60% of AI projects by 2027 (Gartner Report).

I experimented with synthetic data once to boost a fraud detection model, and it improved detection rates by 15% without risking user privacy.

But it’s not foolproof—poorly generated synthetic data can introduce new biases or fail to capture real-world complexity, so quality control is key.
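Here's a deliberately tiny sketch of the generate-then-check loop. Instead of a GAN or diffusion model, it fits a plain Gaussian to one numeric column and samples from it, then compares summary statistics against the real data. The transaction amounts are made up, and the Gaussian is a stand-in I chose to keep the example short.

```python
import random
from statistics import mean, stdev

# Made-up transaction amounts standing in for a sensitive real column.
real_amounts = [12.5, 40.0, 7.2, 55.3, 23.1, 31.8]


def sample_synthetic(column, n, seed=0):
    """Fit a Gaussian to the column and draw n synthetic values from it."""
    rng = random.Random(seed)
    mu, sigma = mean(column), stdev(column)
    return [rng.gauss(mu, sigma) for _ in range(n)]


def summary_gap(real, synthetic):
    """Basic quality check: how far apart are the means and standard deviations?"""
    return abs(mean(real) - mean(synthetic)), abs(stdev(real) - stdev(synthetic))


synthetic_amounts = sample_synthetic(real_amounts, n=1000)
mean_gap, std_gap = summary_gap(real_amounts, synthetic_amounts)

print(f"mean gap: {mean_gap:.2f}, std gap: {std_gap:.2f}")
print("negative amounts generated:", sum(1 for x in synthetic_amounts if x < 0))
```

The summary statistics will look close, yet the generator can still produce negative amounts that could never occur in real transactions. That's the kind of gap a quality check needs to catch before synthetic data goes near training.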

Data-Centric ML: The Shift in Focus

We often think ML is all about models, but the real secret sauce? Data.

The data-centric AI movement focuses on improving data quality, labeling accuracy, and dataset management instead of obsessing over model tweaks.

Tools for dataset versioning and labeling automation, like Label Studio and Supervisely, are becoming mainstream.

I’ve noticed that spending even 20% more time refining data often beats chasing marginal model improvements.

The challenge here is organizational: teams must rethink workflows, invest in better data pipelines, and accept that good data beats fancy algorithms—every time.
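A small, concrete example of data-first work: before touching the model, check where your annotators disagree. This sketch takes a majority vote per item and flags low-agreement items for review. The annotations, the item IDs, and the 0.6 threshold are all made up, and `review_labels` is a hypothetical helper, not a Label Studio or Supervisely function.

```python
from collections import Counter

# Made-up annotations: each item was labeled by three hypothetical annotators.
annotations = {
    "img_001": ["cat", "cat", "cat"],
    "img_002": ["dog", "cat", "dog"],
    "img_003": ["cat", "dog", "bird"],
}


def review_labels(annotations, min_agreement=0.6):
    """Majority-vote each item; flag items whose top label has too little support."""
    resolved, flagged = {}, []
    for item_id, labels in annotations.items():
        label, votes = Counter(labels).most_common(1)[0]
        agreement = votes / len(labels)
        if agreement >= min_agreement:
            resolved[item_id] = label
        else:
            flagged.append(item_id)
    return resolved, flagged


resolved, flagged = review_labels(annotations)
print("resolved:", resolved)
print("needs review:", flagged)
```

Flagged items often point to ambiguous labeling guidelines rather than careless annotators, so fixing the guideline can clean up a whole class of examples at once.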

So, in 2025, the ML infrastructure landscape is about smarter operations, synthetic creativity, and data-first mindsets.

If your ML stack isn’t evolving with these trends, you’re already falling behind. ⚙️

Ethical and Regulatory Evolution

Machine learning isn’t just about building cool tech—it’s about building responsible tech. In 2025, ethics and regulation are front and center, and ignoring them isn’t an option anymore.

AI Safety and Alignment

You’ve probably seen headlines about AI making weird or harmful decisions.

That’s why AI safety and alignment are huge priorities now—making sure models behave as intended, reliably, and transparently.

Research labs are investing heavily in red-teaming (stress-testing AI to find flaws) and interpretability tools that explain how a model reaches decisions.

From my own experience experimenting with open-source LLMs, it’s clear that without careful tuning, models can spout biased or nonsensical outputs.

But here’s the catch: perfect safety is almost impossible. AI systems can surprise even their creators, so this remains a challenging arms race.

Regulations on ML Use

Governments are stepping up, too.

The EU AI Act is leading the charge, aiming to regulate high-risk AI applications with strict requirements.

The US is following with executive orders focused on transparency and accountability.

For businesses, this means more documentation, audits, and compliance overhead—but also an opportunity to build trustworthy AI products that customers prefer.

Ignoring regulations could mean hefty fines or losing market access, so it’s smart to start aligning your projects now.

Ethics and regulations might feel like hurdles, but they’re essential guardrails.

Embracing them early will not only protect your users but also boost your credibility and long-term success. 🌱

Looking Ahead: Strategic Recommendations

So, what should you actually do with all these machine learning trends buzzing around for 2025? Here’s a quick reality check with actionable tips.

If you’re a developer, learn how to work with large language models (LLMs) and AI agents. Frameworks like LangChain or AutoGen are becoming industry standards for building intelligent workflows. Mastering efficient fine-tuning methods like LoRA or QLoRA will save you time and cloud costs.

For businesses, focus on automation powered by ML. Start by modernizing your data pipelines—clean, structured data is the foundation for everything else. Explore vertical-specific ML solutions that fit your industry, whether it’s healthcare, finance, or logistics. You don’t need to reinvent the wheel; leverage domain-specific pre-trained models.

Keep an eye on open models. The shift toward open-source AI is more than a trend—it’s a movement. Companies that tap into this ecosystem can innovate faster and more cheaply, avoiding vendor lock-in.

Finally, invest in MLOps tools to improve your deployment and monitoring processes. This will keep your ML projects scalable, reliable, and cost-effective. Remember, many projects fail not because of the model, but because of poor infrastructure.

From my personal journey, staying updated on these trends has been a game-changer in freelance gigs and academic projects alike. The winners in 2025 won’t be those with the fanciest models but those who adapt quickly and integrate ML smartly into real-world workflows.

Conclusion

Machine learning in 2025 isn’t just about bigger models or flashier demos. It’s about smarter, faster, and more responsible AI that truly fits real-world needs.

We’ve seen how new research directions like neurosymbolic AI and causal learning aim to make machines think deeper.

We explored how evolving infrastructure—from MLOps 2.0 to synthetic data—makes ML projects smoother and more scalable.

And importantly, ethical and regulatory frameworks are no longer optional but essential for building trustworthy AI.

From my own experience, keeping up with these trends has turned what felt like an overwhelming maze into a clear roadmap.

Whether you’re coding, managing, or investing in AI, the key is to stay adaptable and embrace these shifts early.

Because in 2025, the future of ML belongs to those who combine innovation with responsibility.